WASHINGTON - Newsweek and the Daily Beast debuted a survey this week conducted by pollster Douglas Schoen.

The new arrangement appears to mark the end of a nearly two-decade partnership between Newsweek and the survey firm Princeton Survey Research Associates International (PSRAI). And it may be bad news for those who care about full disclosure of the methodological details of public polls.

Consider first the level of detail typically provided by the Newsweek poll when conducted by PSRAI (interests disclosed: PSRAI CEO G. Evans Witt is a neighbor and friend). In addition to the details provided by virtually all pollsters -- sample size, margin of error and survey dates -- their most recent poll release in October 2010 included a "filled-in" questionnaire showing the full text and order of every question asked and percentage results for every answer choice offered, both overall and by key subgroups. It provided a detailed description of the weighting procedure and a breakdown of the number of unweighted interviews for each of the subgroups used to tabulate results. It also noted that the survey sampled both landline and cell phones.

Further, the last Newsweek/PSRAI poll provided a brief explanation of how "likely voters" were selected and a tabulation of the weighted results for the party identification for all adults and for registered and likely voters.

Compare that level of disclosure to what we get with the new Newsweek/DailyBeast/Schoen poll. Schoen's analysis provides the sample size, margin of error and survey dates, and a link to "full poll results" that points to 21 charts showing survey results in a PowerPoint-style slide show. In some cases the slides appear to include full question text; in others, only chart titles like "Obama approval" or "2012 Presidential Election - Three Way Horserace." Nowhere can we find an explanation of how the sample was gathered or weighted, or any results for party identification.

The Republican presidential primary candidate matchup (slide 13) does not even specify that the results were based on a subgroup of 517 likely Republican primary voters. (The Daily Beast provided HuffPost Pollster with that information in response to a request earlier this week.) The slide also includes no explanation of how those likely voters were identified or modeled.
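To see why an undisclosed subgroup size matters, here is a rough sketch in Python of the textbook sampling-error calculation applied to a subgroup of 517 interviews. It assumes simple random sampling and a 95 percent confidence level. The function name and the `design_effect` parameter are illustrative assumptions, not anything published by Schoen or The Daily Beast, and the real error depends on weighting details that were not disclosed.

```python
import math

Z_95 = 1.96  # critical value for a 95 percent confidence level


def margin_of_error(n, p=0.5, design_effect=1.0):
    """Approximate 95 percent margin of error for a proportion.

    n             -- number of interviews in the sample or subgroup
    p             -- assumed proportion (0.5 gives the widest margin)
    design_effect -- hypothetical inflation factor for weighting;
                     1.0 means a pure simple random sample
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return Z_95 * math.sqrt(design_effect * p * (1 - p) / n)


# The subgroup size reported to HuffPost Pollster: 517 likely
# Republican primary voters.
subgroup_moe = margin_of_error(517)
print(f"+/- {subgroup_moe * 100:.1f} points")
```

Under these assumptions the margin on the 517-voter subgroup works out to roughly plus or minus 4.3 percentage points, and any weighting (a `design_effect` above 1.0) would widen it further. A reader cannot run even this simple check unless the subgroup size is published.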

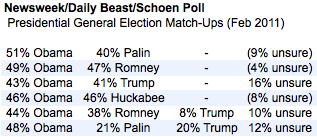

These details may seem wonky, but they can help us better understand and interpret the results. Consider the numbers for a series of 2012 presidential general election pairings tested between Barack Obama and various potential opponents, presented in the table below in the order they appear in the Daily Beast slide show.

The relatively close Obama-Trump match-up -- Obama leads by just two percentage points -- generated headlines elsewhere. Schoen's analysis notes the higher undecided percentage associated with the Obama-Trump question (16 percent), which he attributes to "the substantial degree of uncertainty [Trump's] prospective candidacy provokes."

Perhaps. But one aspect of the results is curious: Why would Obama's support be so much lower (43 percent) in a match-up against Trump than against Romney (51 percent) or Palin (49 percent)? Trump had a net negative rating in a national Gallup poll conducted just four years ago. A WMUR/UNH poll of likely Republican primary voters in New Hampshire gave Trump a 21 percent favorable, 64 percent unfavorable rating earlier this month.

The charts for the three Trump questions include the result for "unsure," while the others do not. Were the Trump questions the only ones to offer "unsure" as an explicit choice?

Oddly, Obama's share of the vote is slightly higher (48 percent) on the three-way choice against Palin and Trump than on the three-way choice against Romney and Trump (43 percent), even though Trump does worse in the Romney matchup (9 percent). Is it possible that these presidential horserace questions were asked of different "split samples," which might explain these odd inconsistencies?

When a pollster releases the complete, filled-in questionnaire the way PSRAI did -- one that is explicit about sample sizes and subgroups -- any reader can easily answer these questions. When the process is opaque, we are left to wonder and speculate.

I asked The Daily Beast to provide a copy of the questionnaire this morning, but they have not yet responded. We will update this article if we get a response.

PSRAI is a long-time member of the National Council of Public Polls (and CEO Witt currently serves as NCPP's president), but Newsweek is not. NCPP's Principles of Disclosure call for the routine publication of the size of the sample and subsamples and of the "complete wording and ordering of questions mentioned in or upon which the release is based." The old Newsweek poll always met those standards. Let's hope the new Newsweek poll does better next time.