Can (or should) you trust technology? It's a question often accompanied by a foreboding feeling, as if the Internet itself were encircling you in its web, just waiting to pounce on any inadvertent error you might make in safeguarding a password or Social Security number. We've all seen corporate software meant to protect our personal information fail, and we've all received emails from the ubiquitous Nigerian uncle who has left us money to be transferred into our bank accounts if we'll only send the routing numbers.

Although examples like these suggest we're entering a perilous new world when it comes to figuring out whom (or what) we can trust, the basic issue of whether to trust technology has in fact been around for hundreds of years. In the past, trusting technology usually meant wondering whether you could count on it to work correctly. If Columbus' quadrant hadn't measured angles accurately, he might never have navigated his ships to the New World. But more and more, trusting technology isn't only a matter of mechanics; it's not only about whether a widget will malfunction or a circuit will short. Today, technology is encroaching on what had until recently been a solely human domain: social interaction. And with that advance comes an entirely new dilemma: Can you trust the motivations of the automated entity (virtual agent, computer avatar, or even humanoid robot) in front of you?

What do I mean by motivations? Exactly what you think. Does the entity, or more accurately the person who designed it, intend it to cooperate with or exploit you? If you remove the technological element, finding the answer to this question poses a familiar challenge. It's a dilemma most of us face every day in deciding whether or not to trust someone new. Trust, after all, is a double-edged sword. While it's true that you can accomplish more by cooperating with others than you ever could alone, deciding to trust others also makes you vulnerable. If a partner acts selfishly and cheats, he can gain much at your expense.

Given trust's importance to success, it should come as no surprise that the human mind has developed ways of reading the body language of potential partners. As my research team has shown, the cues the mind uses to detect dishonesty are actually different from what people think (and that's why even programs used by the TSA to identify dishonesty have been shown to be virtually useless). But the fact that our minds can detect untrustworthiness at better than chance levels isn't the most fascinating part of the story. Rather, it's that our minds will use the same cues to guess the motives of non-living, humanoid entities.

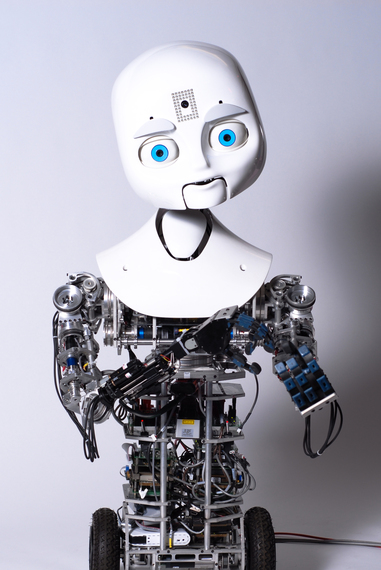

To prove the point, Robert Frank, David Pizarro, and I partnered with Cynthia Breazeal at the MIT Media Lab to design an innovative experiment. We programmed the robot Nexi, which Breazeal designed, to emit specific sets of nonverbal cues while it conversed with participants for 10 minutes each in a "get-to-know-you" setting. Half the participants saw Nexi express cues that we had previously found to predict untrustworthy behavior in humans (e.g., crossing arms, touching the face). The other half saw Nexi express more neutral cues during the interaction.

What happened? Exactly what we expected. Although all the participants reported enjoying the interaction with the robot, those who had witnessed it express the untrustworthy cues not only reported trusting it less, but also expected that Nexi was more likely to cheat them in a subsequent game involving real money. And as a result, they decided to treat the robot less fairly in the monetary exchange as well.

The implications of this finding couldn't be clearer. Technology has reached the point where it can mimic human expressions closely enough that our minds will automatically respond. It can "ping" our trust machinery (the unconscious mechanisms our minds use to decide whom to trust) and, in so doing, manipulate us without our even being aware of it. You see, the reason our "trust machinery" works is that potential partners, assuming they're human, can't completely control their body language. Cues to their internal motivations always leak out. But when it comes to robots or virtual agents (like those employed by many e-commerce sites), the same rules don't apply. There is no leakage. The movements of a robot or virtual agent on a computer screen can be completely controlled. And with complete control comes not only the ability to divorce nonverbal cues from real intentions but also the ability to manipulate perceptions more effectively than any con man could.

Of course, the influence offered by such social technology isn't inherently problematic. Like all scientific advances, its value depends on the goals of those who use it. For technological innovators like Cynthia Breazeal, the ability to make a robot that can connect with and be trusted by others helps immensely in designing robots for compassionate purposes, such as accompanying children through medical procedures where parents cannot (e.g., radiation treatments for cancer). But in the hands of people with nefarious motives, such entities could become potent weapons. Trust sells. Remember that next time you're interacting with an automated agent online.

Adapted from The Truth About Trust