WASHINGTON -- How low can they go? A new study from the Pew Research Center released on Tuesday finds that poll response rates continue to fall "dramatically," reaching levels once considered unimaginable.

Yet the study also finds evidence that, on most of the wide variety of measures tested, declining response rates alone are not causing surveys to yield inaccurate results.

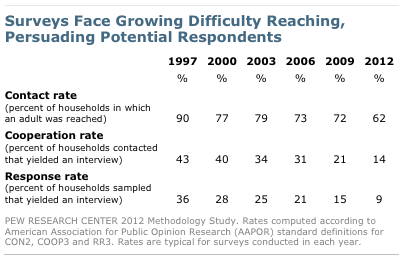

In just 15 years, according to the report, typical response rates obtained by the Pew Research Center have fallen from 36 percent in 1997 to 15 percent three years ago, and to just 9 percent so far in 2012. The most recent decline results partly from the inclusion of cellphone numbers in its samples, which is needed to reach the rapidly growing number of American adults who have a mobile phone but lack landline telephone service. But Pew Research's landline response rates have also fallen (from 25 percent in 2007 to 10 percent this year) and are now only slightly higher than the response rates currently achieved with cellphones (7 percent).

The report released today is based on the third iteration of a study, first conducted in 1997, that involves two parallel surveys. One follows the Pew Research Center's standard methodology and five-day field period; the other makes a much greater effort to obtain the highest possible response rate. The object is to see whether lower response rates make a difference or, at least, whether a far more rigorous survey would obtain different results.

The challenges that face modern polling are perhaps most evident in the effort expended and results achieved by this year's "high effort" survey. Interviewers called households up to 25 times for landline phones and up to 15 times for cellphones over a period of two and a half months, if necessary, in an attempt to make contact. Where addresses were available, they also sent advance letters that explained the survey and offered incentives to participate (ranging from $10 to $20). For households where potential respondents initially refused to participate, an interviewer "particularly skilled at persuading reluctant respondents" made follow-up calls.

Despite all that additional effort -- far more than expended on any typical media survey -- the more rigorous survey obtained a response rate of just 22 percent, less than half the rate achieved just nine years ago (50 percent).

The purpose of all this work was to determine whether declining response rates are causing surveys to yield less accurate results. On that score, the news was mostly good: "[T]elephone surveys that include landlines and cell phones and are weighted to match the demographic composition of the population," the report concludes, "continue to provide accurate data on most political, social and economic measures."

Yet while the surveys remain accurate on "most" measures, the report also emphasizes that response rate declines "are not without consequence." When compared to more reliable government surveys, the study found telephone survey respondents to be "significantly more engaged in civic activity than those who do not participate." Specifically, the telephone respondents were more likely to have volunteered for an organization in the past year (55 percent vs. 27 percent), to have contacted a public official in the past year (31 percent vs. 10 percent) and to have talked with their neighbors in the past year (58 percent vs. 41 percent).

The report checked the accuracy of results in three ways:

- Results from the standard Pew Research survey were compared with identically worded questions on large-scale U.S. government surveys that achieve response rates of 75 percent.

- Both respondents and non-respondents were matched to national commercial databases on "a wide range of political, social, economic and lifestyle measures" to identify statistical bias.

- Finally, results of the standard-methodology Pew Research survey were compared with the high-effort survey, again with an eye toward measurements that differ significantly when response rates are lower.

The good news, again, is that only a handful of these comparisons yielded notable differences, and most of those involved the greater tendency of telephone respondents to engage in civic activity.

Of particular consequence to political surveys is that households that responded to the survey were more likely to have voted in the 2010 election (54 percent) than those that did not respond (44 percent). This finding matters for pollsters' efforts to screen for the most likely voters: it suggests that, to some extent, the screening process is already baked into respondents' decision to participate in the survey.

A reassuring finding for those who follow pre-election polling is that registered Republicans and Democrats "have equal propensities to respond to surveys." Similarly, the mix of Democrats and Republicans was roughly the same in both the standard and high-effort surveys. So a lower response rate, in and of itself, should not automatically skew a survey to either party.

The study also found that respondent households were just as likely as non-participating households "to be heavy internet users, newspaper or magazine readers, prime time TV watchers, or radio listeners."

The sharp decline in survey response rates makes this sort of study essential. In the future, more pollsters will likely adopt some of its methods, such as obtaining richer data on both respondents and those who refuse to participate, as standard procedure to identify and correct skews in their samples as they occur.

At the very least, the latest Pew Research report should be required reading for anyone who relies on political polling data.