When I taught research methods and statistics to graduate students at Brooklyn College, a student who submitted a project like the daily and weekly political polls featured during the recent presidential campaign would have received a low grade or failed the course. I would have explained to the student that at best his or her poll was what is called a pilot investigation--a work in progress that identifies the issues and obstacles to be addressed in designing a valid piece of research.

The same can be said about many of the political polls. Their primary flaw was in the critical first link in the chain: the sampling, which refers to how the pollsters selected the people they queried and how many participants were in the final samples from which conclusions were drawn.

If the purpose of a poll was to assess preferences or intentions to vote for Donald Trump or Hillary Clinton in a particular state, the sample should have been large enough to include the diversity of the voting population--by age, religion, ethnicity, political affiliation, education, and income. Many samples did not. For example, according to the Independent Voter Network (IVN), the CNN polls did not adequately represent 18- to 34-year-old voters, a demographic of 75 million and the largest living U.S. generation; and Fox News polls notably under-sampled independent voters.

A striking example of inadequate sampling was the Suffolk University poll of Nevada conducted in August 2016. It surveyed "500 likely voters" on a variety of issues, including whom they intended to vote for among the five candidates on the Nevada ballot--plus two additional choices: "none of these candidates" and "undecided." CNN boldly headlined the results of this flawed poll: "Nevada Poll: Clinton and Trump Neck and Neck."

Despite faulty sampling and other possible deficiencies, some polls did correctly predict the winner in many states and nationally. But this is not a vindication of the polls or necessarily something to cheer about. After all, there were only two viable candidates. Place the name Donald Trump on one piece of paper and Hillary Clinton on another and put the papers in a hat. Then ask a few thousand people to pick the winner out of the hat. As probability would predict, about 50 percent of the picks will be correct. Similarly, put in the hat slips showing different narrow margins of victory for one candidate or the other (historically the usual outcome). Many of the picks will be correct, some will be close to correct, and the ones that miss by a few points could be interpreted as close once the margin of error is factored in. But these outcomes can only be determined after the fact. For legitimate discussion of a race while the campaign is under way, it is essential to have trusted assessments based on valid research.
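The hat analogy can be checked with a few lines of simulation. This is a minimal Python sketch for illustration only; the function name `hat_pick_accuracy`, the 5,000 pickers, and the fixed random seed are hypothetical choices, not taken from any poll.

```python
import random

def hat_pick_accuracy(n_pickers: int, seed: int = 2016) -> float:
    """Fraction of blind picks from a two-name hat that match the actual winner."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    names = ["Trump", "Clinton"]
    actual_winner = "Trump"  # known only after the fact
    correct = sum(rng.choice(names) == actual_winner for _ in range(n_pickers))
    return correct / n_pickers

accuracy = hat_pick_accuracy(5000)
print(f"{accuracy:.1%} of blind picks were correct")
```

Whichever name is set as the actual winner, roughly half of the blind picks come out "right," with no information about voters at all.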

The average size of poll samples is 1,000, according to PollingReport.com. A sample as small as 500, as in the Nevada poll, is clearly not sufficient to predict a trend for the population of an entire state. For predicting a national trend, even a sample of 1,700 (as in several Pew polls) may be inadequate.

Viewed from a scientific perspective, is it any wonder that most of the polls missed the mark?

The Washington Post reported that an analysis of 145 national and 14 state polls conducted during the week before the election "consistently overestimated Clinton's vote margin against Trump." Jon Krosnick, professor of political science at Stanford University, told the Washington Post that he wasn't surprised by the inaccuracy of state polls because of flawed sampling; he added, "most state polls are not scientific."

And these polls look even murkier when their faulty sampling is probed further.

Polls typically use the highly respected technique of "random sampling." But none of the 2016 political polls actually accomplished random sampling, despite what may have been the intention of the pollsters. In random sampling a population for study is identified, such as all the registered voters in a state, or for a national poll all registered voters in the nation. The researcher then selects at random, let's say, every 20th person in a list or telephone directory for that entire population. The assumption is that the random selection is highly likely to include the range of diversity within the population, thus resulting in an unbiased and inclusive sample.
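Selecting "every 20th person" from a list, starting at a randomly chosen point, is what statisticians call systematic sampling; it behaves like a random sample as long as the list is not ordered in a pattern that lines up with the interval. The Python sketch below is illustrative only: the helper `every_kth` and the list of 10,000 voter IDs are hypothetical.

```python
import random
from typing import List, Optional

def every_kth(population: List[str], k: int = 20, seed: Optional[int] = None) -> List[str]:
    """Systematic sample: pick a random starting point, then take every k-th entry."""
    rng = random.Random(seed)
    start = rng.randrange(k)  # random start between 0 and k - 1
    return population[start::k]

# Hypothetical list of 10,000 registered voters, identified only by ID
voters = [f"voter-{i:05d}" for i in range(10_000)]
sample = every_kth(voters, k=20, seed=1)
print(len(sample), sample[:3])
```

Note that the code only decides whom to contact; it says nothing about whether those people answer.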

A key factor that defines a truly random sample is that the researcher or pollster picks the sample, as in selecting every 20th person. All well and good. But what the polling reports don't tell you is that when pollsters telephone every 20th person, most hang up, don't answer, or refuse to participate.

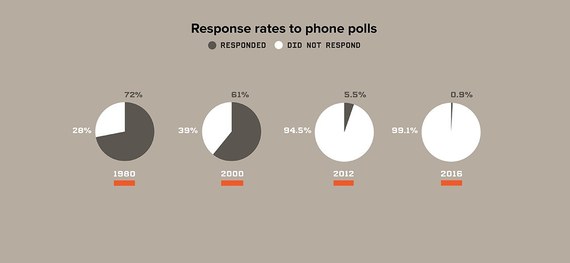

The American Association for Public Opinion Research reports that people are increasingly unwilling to participate in surveys. Wired Magazine has reported a dramatic decline in response to telephone polls, from a 72 percent response rate in 1980 to 0.9 percent in 2016 (Pew reported a low of 8 percent by 2014). Wired traces the sharp decline over the last decade to the widespread use of cell phones. Pollsters favor robo-dialing to landlines. But "By 2014, 60 percent of Americans used cell phones either most or all of the time, making it difficult or impossible for polling firms to reach three out of five Americans."

So samples with low response rates were not random. The pollsters didn't select the samples, the samples selected the pollsters.

The rejection rate raises serious questions about possible bias. Who were the ones who agreed to participate? How do they differ from those who refused to participate? How many of the responders will actually vote? In the 2016 presidential election, 90 million eligible voters didn't cast a ballot. Do the ones who participated in the poll represent a particular bias that doesn't speak for the population as a whole? We don't know--nor do the pollsters. Such a sample is not random. It's biased--but we don't know in what ways it's biased. Therefore, no valid conclusions can be drawn about the choices or preferences of the population as a whole.
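A small simulation shows how a sample can be randomly selected by the pollster yet still be biased once people decide for themselves whether to respond. This Python sketch is hypothetical throughout: true support for an unnamed "candidate A" is set at 50 percent, and A's supporters are assumed to answer 1.2 percent of calls versus 0.8 percent for everyone else. These rates are invented for illustration, not measured.

```python
import random

def poll_with_nonresponse(n_calls, true_support_a=0.50,
                          resp_rate_a=0.012, resp_rate_b=0.008, seed=7):
    """Simulate random dialing in which each called person decides whether to respond.

    Returns (number of responders, share of responders who back candidate A).
    """
    rng = random.Random(seed)
    responders_a = responders_b = 0
    for _ in range(n_calls):
        # The pollster selects the person at random...
        supports_a = rng.random() < true_support_a
        # ...but the person decides whether to take part.
        rate = resp_rate_a if supports_a else resp_rate_b
        if rng.random() < rate:
            if supports_a:
                responders_a += 1
            else:
                responders_b += 1
    n_resp = responders_a + responders_b
    return n_resp, responders_a / n_resp

n_resp, share_a = poll_with_nonresponse(300_000)
# Expected share among responders: 0.5*0.012 / (0.5*0.012 + 0.5*0.008) = 0.60
print(f"{n_resp} of 300,000 calls answered; {share_a:.1%} back A (true support: 50.0%)")
```

With these made-up rates, about 1 percent of calls yield an interview, and the poll reports roughly 60 percent support for a candidate whose true support is 50 percent. Nothing in the responses themselves reveals the distortion.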

A national poll would obviously require a larger sample than a state or city poll, since it covers greater diversity in addition to a larger target population. While there is no fixed rule for how large is large enough, the sample should be large enough to capture the diversity of factors in the population under study.

Pollsters aim for a margin of error of plus or minus 3 percentage points or less. Translated into election results, a poll showing 47 percent support for a candidate with a 3-point margin of error means that actual support most likely lies between 44 and 50 percent. The larger the sample, the smaller the sampling error is likely to be. Many polls claim a 3-point margin of error. But to genuinely achieve a low margin of error for state populations--and surely for national surveys--many thousands of participants would be required. Even then, whether or not the sample captured the diversity within the population would affect the sampling error.
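The margin of error that polls advertise usually comes from the textbook formula for a simple random sample, MOE = z × sqrt(p(1 − p) / n), with z = 1.96 for 95 percent confidence and p = 0.5 as the worst case. The Python sketch below (the function name is hypothetical) computes that nominal figure for the sample sizes mentioned in this article. It assumes a genuinely random sample in which everyone selected responds, which is exactly the assumption questioned here; it does not account for nonresponse or weighting.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Nominal 95% margin of error, in percentage points, for a simple random sample of size n."""
    return 100 * z * math.sqrt(p * (1 - p) / n)

for n in (500, 1_000, 1_700):
    print(f"n = {n:>5}: +/- {margin_of_error(n):.1f} points")
# n =   500: +/- 4.4 points
# n =  1000: +/- 3.1 points
# n =  1700: +/- 2.4 points
```

In other words, the familiar "plus or minus 3" for a sample of about 1,000 is a best case that holds only if the sample really is random.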

Pollsters apply the statistical technique of weighting to correct or compensate for small samples in which some groups may be under-represented. But each layer of artificial corrective procedure increases the margin of error and lowers the probability of reliable results.
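One standard way to quantify the cost of weighting is Kish's effective sample size, n_eff = (Σw)² / Σw², which shrinks as the weights become more unequal; the margin of error is then computed from n_eff rather than the raw count. This Python sketch uses a hypothetical poll of 1,000 in which young voters should make up 30 percent of the sample but only 100 responded, so each is weighted up to count as 3 people while the other 900 are weighted down to 7/9. All numbers and function names are illustrative assumptions, and Kish's formula is an approximation that measures only the precision lost to unequal weights, not any bias that remains.

```python
import math

def effective_sample_size(weights):
    """Kish's approximation: (sum of weights)^2 / (sum of squared weights)."""
    total = sum(weights)
    return total ** 2 / sum(w * w for w in weights)

def weighted_margin_of_error(weights, p=0.5, z=1.96):
    """Nominal 95% margin of error, in points, using the effective sample size."""
    return 100 * z * math.sqrt(p * (1 - p) / effective_sample_size(weights))

# Hypothetical poll of 1,000: 100 young respondents weighted up to represent 300 people,
# 900 others weighted down to represent 700, so total weight stays 1,000.
weights = [3.0] * 100 + [7 / 9] * 900

print(f"effective sample size: {effective_sample_size(weights):.0f}")
print(f"margin of error: +/- {weighted_margin_of_error(weights):.1f} points "
      f"(unweighted: +/- {weighted_margin_of_error([1.0] * 1000):.1f})")
```

Here the weighted poll of 1,000 behaves like an unweighted random sample of about 692, and the nominal margin of error grows from about 3.1 to about 3.7 points.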

Why did the cable networks give so much airtime to worthless--or at best highly questionable--polls? Did the anchors and panels that riffed on them really believe they were offering "breaking news"?

More likely, networks were desperate for something--anything--"new" to fill the hours of news that the public craves and has come to expect. Every "new" poll--"Hillary is up a point," "Trump is now tied," "Trump just surged ahead two points," "Hillary is gaining in Texas"--enabled newscasters to assemble panels of "experts" to analyze the latest overnight poll.

So how should the public--not to mention the media--assess poll information? The National Council on Public Polls (NCPP) lists twenty questions journalists should ask.

A good rule of thumb for everyone: Don't pay attention to a poll unless it reports the size of the sample, the participation or rejection rate, how the sample was drawn, and evidence that the pool from which the sample was drawn included the diversity within the target population as a whole. With that information you can then decide whether the poll should be taken seriously. If it is like many of those rolled out during the 2016 campaign, you will likely conclude that its reported results are unscientific and fatally flawed.

Perhaps there is a better way to get quick assessments. But let's not call it science. Call it observation and intuition.

Months before the 2016 presidential election, filmmaker and political advocate Michael Moore predicted a Trump victory. I, like many others, thought he was saying that to scare millennials into voting. But it turns out that not only was Moore correct about the outcome of the election, but he also meant what he said. His observations and intuition were on the mark.

Moore comes from the Midwest. He sensed the mood of factory workers and shoppers in supermarkets, malls, and elsewhere in the community where ordinary folks gather. He grasped their pain, anger, and fear about being left out. He sensed that despite all their distaste for Trump's behavior they would vote for him as a protest against the establishment. Trump seemed to know them, they felt. In his rallies and media appearances he gave them hope that he would deliver on his promises to bring back manufacturing and boost the economy for disenfranchised workers.

With Michael Moore in mind, we might do well to throw out the polls and instead talk to hairdressers, bartenders, waiters, and others--like cops and cabdrivers--close to people in the trenches of daily living.

In the next election cycle, let's have a face-off between a panel of these community intuitives and panels of cable network commentators who base their predictions and analyses on political polls.

Who would Las Vegas odds-makers favor?

Bernard Starr, PhD, is Professor Emeritus at the City University of New York (Brooklyn College) where he directed a graduate program in gerontology and taught developmental psychology in the School of Education. His latest book (expanded edition) is "Jesus, Jews, and Anti-Semitism in Art: How Renaissance Art Erased Jesus' Jewish Identity and How Today's Artists Are Restoring It."