Server downtime can be costly. We've seen in the news that the online servers behind EVE Online, SimCity and Xbox are going down left and right, but what about the systems that enterprises rely on to conduct high volumes of business? Companies around the globe depend on stable server infrastructures and cloud services like Amazon Web Services to conduct business, power ecommerce sites and fuel application development.

In fact, the average cost of downtime is estimated at $5,600 per minute, according to the Uptime Institute Symposium. The report, titled "Understanding the Cost of Data Center Downtime: An Analysis of the Financial Impact of Infrastructure Vulnerability," was based on a recent Ponemon Institute study, "Calculating the Cost of Data Center Outages." The research identified costs across 41 data centers in varying industry segments; the data centers studied were a minimum of 2,500 square feet, so as to identify the true bottom-line costs of data center downtime.

CIOs had better sit up and take notice.

Data center managers already know that system downtime can be very expensive for a company, but they may not know the real extent of that expense when servers, networking and storage suffer a major outage. According to the report, the average incident length was approximately 90 minutes, resulting in an average cost per incident of approximately $505,500. For a total data center outage, which had an average recovery time of 134 minutes, average costs were approximately $680,000.
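As a rough check on these figures, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the simple "duration times per-minute cost" model is an assumption, not the method used in the report.

```python
COST_PER_MINUTE = 5_600  # USD per minute, the average figure quoted in the article


def incident_cost(minutes, cost_per_minute=COST_PER_MINUTE):
    """Estimate an outage's cost as duration multiplied by per-minute cost."""
    return minutes * cost_per_minute


def implied_rate(total_cost, minutes):
    """Back out the per-minute cost implied by a reported total and duration."""
    return total_cost / minutes


# A 90-minute incident at the average rate
print(incident_cost(90))                  # 504000

# Per-minute cost implied by a $680,000 total outage lasting 134 minutes
print(round(implied_rate(680_000, 134)))  # 5075
```

The 90-minute estimate of $504,000 lands close to the reported average of roughly $505,500 per incident, while the total-outage figures imply a somewhat lower per-minute rate of about $5,075.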

In his Wednesday presentation at GigaOM's Structure conference, AppliedMicro President and CEO Paramesh Gopi said hardware builders must "future proof" their products. Companies like AppliedMicro, which produces semiconductors, will have to show server builders that they can contribute to a malleable product.

"Software drives hardware. We are no longer at the point where we have Windows and an x86 architecture drive innovation," Gopi said. "We need to have a little more cognizance of what will happen two, three years from now."

Need for IT Monitoring Technologies

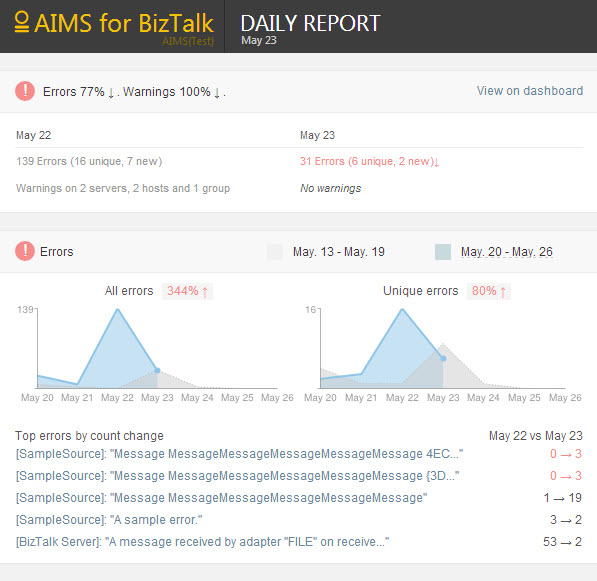

IT system monitoring has emerged as a tool to help reduce downtime, but until now IT departments have been working in the dark when determining the baseline traffic thresholds and traffic patterns needed to set up monitoring. On Monday, the intelligent IT monitoring company AIMS Innovation introduced a new technology that integrates automatic threshold setting, resulting in an increase of up to 50 percent in IT operations efficiency.

"Enterprises are failing to monitor the most critical component in the IT architecture - the integration platform," said Ivar Sagemo, CEO of AIMS Innovation. "AIMS Innovation's solution will completely change the way companies monitor this critical part of enterprise infrastructure and essentially eliminate all downtime related to the integration platform. This keeps productivity high and saves companies substantial money."

Think of it as a self-learning algorithm that helps system admins monitor and manage server loads and keep networks running smoothly. AIMS Innovation's technology enables ongoing self-optimization: the software learns the enterprise systems' normal behavior and adjusts alerts over time, becoming more accurate at flagging potential problems that can lead to downtime.
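To make the idea of automatic threshold setting concrete, here is a minimal sketch of one common approach: a rolling baseline that raises an alert when a reading exceeds the recent mean by a few standard deviations. It is not AIMS Innovation's algorithm, which the article does not describe. The class name, the window size, the multiplier `k` and the sample traffic values are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class AdaptiveThreshold:
    """Hypothetical baseline learner: alert when a sample exceeds
    mean + k * stdev of the recent non-anomalous samples."""

    def __init__(self, window=50, k=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # rolling window of normal readings
        self.k = k
        self.min_samples = min_samples       # readings needed before alerting

    def observe(self, value):
        """Return True if value looks anomalous; otherwise fold it into the baseline."""
        alert = False
        if len(self.history) >= self.min_samples:
            threshold = mean(self.history) + self.k * stdev(self.history)
            alert = value > threshold
        if not alert:
            # Only normal readings update the baseline, so a spike
            # does not inflate the threshold for later samples.
            self.history.append(value)
        return alert


# Made-up message counts per minute, ending in a sudden spike
monitor = AdaptiveThreshold()
traffic = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 101, 250]
alerts = [monitor.observe(v) for v in traffic]
print(alerts[-1])  # True: the spike to 250 is flagged
```

Because the threshold is recomputed from recent readings, nobody has to guess a fixed limit up front, which is the "working in the dark" problem described above.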

Even if an enterprise system is up 99.5% of the time, this still translates to almost 44 hours a year of downtime. Meanwhile, Aberdeen Group found that between June 2010 and February 2012, the cost per hour of downtime increased an average of 65%.
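The 44-hour figure follows directly from the availability percentage. A short sketch, assuming a 365-day year and a hypothetical helper name, shows the conversion and how quickly downtime shrinks as availability improves:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def annual_downtime_hours(availability_pct):
    """Convert an availability percentage into expected hours of downtime per year."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


for pct in (99.5, 99.9, 99.99):
    print(f"{pct}% uptime -> {annual_downtime_hours(pct):.1f} hours/year")
```

At 99.5% availability this works out to 43.8 hours a year, matching the "almost 44 hours" cited above.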

A USA Today survey of 200 data center managers found that over 80% of system managers reported that their downtime costs exceeded $50,000 per hour. For over 25%, downtime cost exceeded $500,000 per hour.

Integration costs will exceed application costs.

As CIOs work to keep systems running smoothly and prevent downtime, yet another challenge emerges from an unexpected direction - the components themselves. As enterprises grow, they rely on complex, extensive IT infrastructure to keep traffic and data flowing smoothly from one component of the enterprise to another, from databases to servers to the cloud. Until recently, the task of linking these disparate systems together remained relatively straightforward. With the introduction of the cloud and bring-your-own-device (BYOD) environments, however, systems integration costs now exceed the costs of individual components. The industry challenge will be to keep IT costs down while maintaining networks well enough to prevent downtime.