This year's Democratic primary in Michigan marked one of the biggest errors in polling history.

Polling averages showed Hillary Clinton leading by 18 percentage points or more, yet she lost to Bernie Sanders by 1.5 percentage points, possibly setting a new record for the biggest upset relative to polling averages.

Polls projected the 2015 U.K. general election to be very close, with neither the Conservatives nor Labour expected to gain or lose many seats in Parliament. Instead, the Conservatives surprised nearly every pollster with a lead of about 6 percentage points, winning enough seats to regain an outright majority in Parliament.

There have been other high-profile misfires in Israel, Greece, and some closely watched U.S. Senate contests during the 2014 midterm elections. Even Nate Silver, famed statistician and editor in chief of FiveThirtyEight, thinks "the world may have a polling problem."

Polling is teetering on the edge of disaster. Or so we've been hearing a lot recently.

But hold the phone. The overall accuracy of U.S. pre-election polling has held steady for the last 15 years. While polls missed a handful of Senate races in 2014, the overall accuracy of polls for all statewide contests that year was roughly in line with previous midterm elections. And there is no evidence that polls are producing more variable results than they have in the past.

Beyond the debate over polling's accuracy, however, one thing is certain: We have entered a new era of polling, ushered in by an upheaval in communication technology that has already changed the way polls are conducted… So far, this new era is less about any single new approach to collecting data than about the way pollsters have adapted their techniques to deliver representative and reliable data.

To understand the changes now underway and their implications, consider the way polling has evolved through distinct eras over the past 100 years.

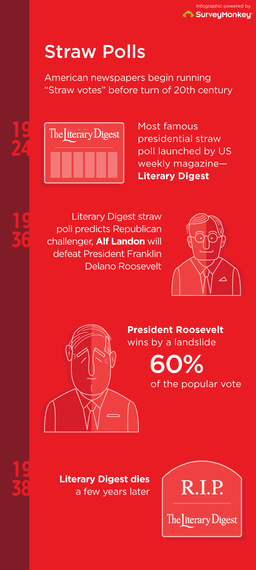

Straw polls

American newspapers first started running straw polls of various kinds (sometimes called "straw votes") just before the turn of the 20th century. Some involved ballots printed in newspapers and returned by readers; others involved door-to-door canvassing. What they all had in common was an effort to collect the preferences of as many voters as possible, with no effort to ensure that those who responded represented the larger electorate. The most ambitious, highly regarded, and ultimately infamous of these polls was run by the weekly magazine Literary Digest, which sent paper ballots to millions of Americans nationwide via the U.S. mail.

Starting in 1924, the Digest mailed printed "ballots" (all hand-addressed) to 10 to 20 million households, asking recipients to fill them out and return them. The postcard ballots also included a subscription solicitation.

The names and addresses came from a wide variety of lists, though most were culled from telephone directories and automobile registries in an era when both sources skewed disproportionately toward upper-income Americans.

The Digest's editors made no effort to statistically adjust or weight their results, relying instead on the sheer volume of responses to produce an accurate result. The Digest's straw polls earned a reputation for accuracy because they correctly forecast the winners of the 1928 and 1932 presidential elections. But even then, problems were evident beneath the surface: the Digest's results sometimes overstated the Republican share of the vote and occasionally erred in individual states.

Then came 1936. The Literary Digest straw poll predicted that Republican challenger Alf Landon would defeat President Franklin Delano Roosevelt by a 13-percentage-point margin (54% to 41%). Instead, Roosevelt won in an even larger, 24-point landslide (61% to 37%). The failure led to the demise not just of straw polling but of the Literary Digest itself a few years later.

To this day, many blame the Literary Digest's failure entirely on its unrepresentative lists of 1930s telephone and automobile owners. In recent decades, however, historical data have revealed another, possibly more significant problem, which pollsters call "response bias": Roosevelt voters were simply less likely than Landon voters to return their ballots.
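To see why sheer volume could not rescue the Digest from response bias, consider a toy calculation. The numbers below are invented for illustration and are not the Digest's actual figures: suppose 55% of the people who received ballots favored Roosevelt, but Landon supporters mailed their ballots back at a 30% rate versus 20% for Roosevelt supporters. The hypothetical function `observed_shares` computes each candidate's share of the ballots that actually came back.

```python
def observed_shares(true_shares, return_rates):
    """Share of *returned* ballots won by each candidate.

    true_shares:  each candidate's share among everyone who received a ballot
    return_rates: fraction of each candidate's supporters who mail the ballot back
    Both inputs below are hypothetical illustration values.
    """
    returned = {c: true_shares[c] * return_rates[c] for c in true_shares}
    total = sum(returned.values())
    return {c: returned[c] / total for c in returned}


# Invented numbers: Roosevelt leads among recipients, but his supporters reply less often.
shares = observed_shares(
    true_shares={"Roosevelt": 0.55, "Landon": 0.45},
    return_rates={"Roosevelt": 0.20, "Landon": 0.30},
)
for candidate, share in shares.items():
    print(f"{candidate}: {share:.1%}")
```

In this toy case Landon "wins" about 55% of the returned ballots despite trailing among recipients. Note that the number of ballots mailed never enters the calculation: sending 10 million ballots instead of 10,000 would leave the estimate exactly as skewed.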

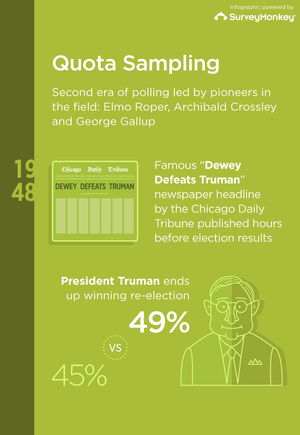

Quota sampling

The Literary Digest debacle ushered in a second era led by polling pioneers Elmo Roper, Archibald Crossley and, most famously, George Gallup, who adopted new methods to correct the flaws they perceived in the straw polls. To obtain what they argued were representative samples, they sent trained interviewers to win the cooperation of respondents across all demographic and economic categories and to interview them face to face.

Although explicitly described as "scientific" by their proponents, these newer methods did not involve random sampling. Instead, Gallup and his peers sent out interviewers to speak to prescribed numbers of respondents in each income and demographic category within set geographical areas, a method now known as "quota sampling."

Polls using this methodology proliferated in the 1940s despite some nagging problems. As David Moore describes in his book The Super Pollsters, interviewers in that era had considerable discretion in how and where they found respondents to meet their quotas.

Some would visit parks, construction sites or other outdoor areas in search of respondents who looked like they might fill their quotas. Others might look for respondents who seemed easier to interview, which created another potential for bias.

Then came 1948…

At the end of the campaign, the three preeminent pollsters—Gallup, Roper and Crossley—all confidently predicted that Thomas Dewey would defeat President Harry Truman by margins ranging from 4 to 15 percentage points. Their polling and prognostication helped create a nearly unanimous conventional wisdom anticipating a Dewey victory.

That belief helped produce one of the biggest blunders in polling history. On election night, the Chicago Daily Tribune declared in a bold-faced headline "Dewey Defeats Truman," just hours before complete vote tallies found that President Truman had been reelected by a 5 percentage point margin nationwide.

Even today, the image of a grinning Truman holding aloft that newspaper and its headline is iconic, a symbol of the unpredictable nature of election polling.

Two academic panels investigated what went wrong in 1948 and found many reasons for the misfire. While the pollsters themselves concluded they had underrated the importance of undecided voters and had missed late shifts toward Truman by not conducting last minute polls, the academic experts also attacked the use of quota sampling.

The committee formed by the Social Science Research Council stopped short of blaming sampling methodology for missing Truman's win, but they did find "considerable systemic error" in quota samples that consistently underrepresented the less-well educated. They urged pollsters to adopt random "probability sampling" in their future work.

Probability sampling

One 1948 survey did correctly forecast Truman's victory, even though forecasting the election was not its purpose. The Survey Research Center (SRC) at the University of Michigan was conducting an academic study of attitudes on foreign policy and added two vote preference questions at the last minute. The SRC fielded the study in late October, finished interviewing just before the election, and used a "random probability" sampling method rather than quotas.

The sampling methods used by the SRC had been developed by other academics in the 1930s. They involved randomly sampling clusters of counties across the country, then neighborhoods within the selected counties, then individual households within those neighborhoods, and finally a specific respondent within each household. Because selections were made randomly at each step, every member of the U.S. population had an equal, or at least known, chance of being selected.
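The stage-by-stage selection described above can be sketched in a few lines of Python. This is a simplified illustration, not the SRC's actual procedure: the nested frame (county, neighborhood, household, adults) is randomly generated, each stage uses simple random sampling of a fixed number of units, and one adult is chosen per household, whereas real designs typically select clusters with probability proportional to size. What the sketch shows is that each person's chance of selection is the product of the selection fractions at every stage, so it is known even when it is not equal.

```python
import random


def build_frame(rng, counties=40, hoods=10, households=25, max_adults=4):
    """Hypothetical nested frame: county -> neighborhood -> household -> list of adults."""
    frame = {}
    for c in range(counties):
        county = f"c{c}"
        frame[county] = {}
        for h in range(hoods):
            hood = f"{county}-n{h}"
            frame[county][hood] = {}
            for k in range(households):
                hh = f"{hood}-h{k}"
                n_adults = rng.randint(1, max_adults)
                frame[county][hood][hh] = [f"{hh}-a{a}" for a in range(n_adults)]
    return frame


def multistage_sample(frame, rng, n_counties, n_hoods, n_households):
    """Randomly select counties, then neighborhoods, then households, then one adult each.

    Returns (person, inclusion_probability) pairs; each probability is the
    product of the selection fractions used at every stage.
    """
    sample = []
    p_county = n_counties / len(frame)
    for county in rng.sample(sorted(frame), n_counties):
        hoods = frame[county]
        p_hood = n_hoods / len(hoods)
        for hood in rng.sample(sorted(hoods), n_hoods):
            households = hoods[hood]
            p_household = n_households / len(households)
            for hh in rng.sample(sorted(households), n_households):
                adults = households[hh]
                person = rng.choice(adults)  # one adult per selected household
                p = p_county * p_hood * p_household * (1 / len(adults))
                sample.append((person, p))
    return sample


rng = random.Random(1948)
frame = build_frame(rng)
sample = multistage_sample(frame, rng, n_counties=8, n_hoods=3, n_households=5)

population = sum(
    len(adults)
    for hoods in frame.values()
    for households in hoods.values()
    for adults in households.values()
)
estimate = sum(1 / p for _, p in sample)  # Horvitz-Thompson estimate of the adult population
print(len(sample), population, round(estimate))
```

Because every inclusion probability is known, its inverse can serve as a base weight; summing those weights (a Horvitz-Thompson estimate) gives an unbiased estimate of the number of adults in the frame, a handy check that the probabilities were tracked correctly.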

The success of the SRC poll raised the profile of both its sponsor and random probability sampling, which soon became the gold standard for survey research. News media pollsters gradually shifted from quota to probability sampling, and the SRC scholars at Michigan launched the biennial election studies that became the preeminent ongoing academic survey of political attitudes in the United States, now known as the American National Election Studies (ANES).

Multi-stage random probability samples of individuals interviewed in person remain at the heart of the high-quality scientific surveys that the U.S. Census Bureau and other government statistical agencies conduct to this day, such as the Current Population Survey (CPS) and the American Community Survey (ACS).

In the realm of elections, pollsters continued to conduct in-person random sample surveys until the late 1970s, when a key technological evolution kicked off yet another era of reinvention.

Telephone surveys

The Literary Digest failure left most pollsters wary of conducting polls by telephone. As late as 1960, a quarter of Americans still lacked home telephone service, so any phone poll would still have skewed toward higher-income respondents.

These attitudes started to change in the 1970s, when telephone coverage finally grew to more than 90% of U.S. households and the best-known pollsters began experimenting with telephone polling. By the mid-1980s, nearly 95% of Americans had home telephone service, and, partly because that share was even larger among voters, most election polls were being conducted by telephone.

Phone surveys offered a number of advantages over those conducted in person. They were faster and less expensive, and they could be run from a central facility where all interviewers were carefully trained and supervised to follow highly standardized procedures. Telephone numbers also allowed sampling that was even closer to truly random (and thus statistically more precise) than the "cluster" designs used for in-person surveys. Samples could be drawn from the pool of all assigned numbers, with the last digits randomized to reach every working telephone, whether listed or unlisted.
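A minimal sketch of that idea, often called random digit dialing, follows. It assumes a hypothetical list of "banks" (the first eight digits of a ten-digit U.S. number, each covering 100 consecutive numbers) known to contain assigned numbers; the banks below use the 555-0100 to 555-0199 range, which is reserved for fictional use. Each draw picks a bank at random and then randomizes the last two digits, so listed and unlisted numbers are equally likely to be chosen. Real samples are built from far larger bank lists and screened for business and nonworking numbers, which this sketch omits.

```python
import random

# Hypothetical 100-banks: area code + exchange + first two digits of the line number.
# 555-01xx numbers are reserved for fictional use, so none of these are real lines.
BANKS = ["20255501", "31255501", "41555501", "61755501"]


def rdd_sample(banks, n, rng):
    """Draw n distinct numbers by choosing a bank at random and randomizing the last two digits."""
    if n > 100 * len(banks):
        raise ValueError("cannot draw more distinct numbers than the banks contain")
    numbers = set()
    while len(numbers) < n:
        bank = rng.choice(banks)
        suffix = f"{rng.randrange(100):02d}"  # last two digits: 00 through 99
        numbers.add(bank + suffix)  # duplicates are discarded, so draws are without replacement
    return sorted(numbers)


def pretty(number):
    """Format a ten-digit string as (AAA) EEE-LLLL."""
    return f"({number[:3]}) {number[3:6]}-{number[6:]}"


rng = random.Random(1970)
sample = rdd_sample(BANKS, 10, rng)
for number in sample:
    print(pretty(number))
```

Because every bank here holds exactly 100 numbers, picking a bank uniformly and then a suffix uniformly gives every number in the pool the same chance of selection, which is the property the directory-based lists of the Literary Digest era lacked.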

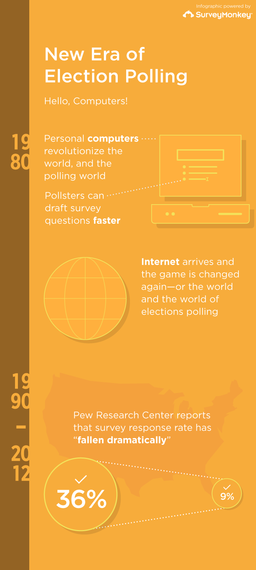

The introduction of personal computers in the 1980s further disrupted the field. Low-cost PCs made it possible to tabulate survey results quickly without spending small fortunes to buy time on mainframes and minicomputers. The new technology also let pollsters draft survey questions faster and transmit the text via modem (and eventually via the internet) to specialized call centers, which would send the digitized data back through the same channel. Networked PCs also lowered the cost of computer-assisted interviewing, allowing call centers to abandon hand-dialing and paper-and-pencil questionnaires and to field surveys more quickly and efficiently.

Together, these changes fueled a rapid proliferation of telephone polls over the past 30 years, and such polls quickly became ubiquitous in the news media and in political campaigns at the national, state and local levels. National surveys conducted by news organizations such as ABC News, CNN, Fox News, the Washington Post and the New York Times are still conducted by telephone today.

The new era?

At this point, our story takes a different turn. The technology revolution that helped make polling omnipresent has also gradually but inexorably eroded the conditions that enabled random sample surveys by telephone.

The changes were best described six years ago by Jay Leve, the editor and founder of SurveyUSA, a company that conducts phone surveys using an automated, recorded voice methodology. All phone polling, Leve argued, depends on a set of assumptions:

You're at home; you have a [home] phone; your phone has a hard-coded area code and exchange which means I know where you are; you're waiting for your phone to ring; when it rings you'll answer it; it's OK for me to interrupt you; you're happy to talk to me; whatever you're doing is less important than talking to me; and I won't take no for an answer—I'm going to keep calling back until you talk to me.

That was the reality of telephone usage in the early 1980s. The current experience, he said, is quite different:

In fact, you don't have a home phone; your number can ring anywhere in the world; you're not waiting for your phone to ring; nobody calls you on the phone anyway; they text you or IM you; when your phone rings, you don't answer it—your time is precious, you have competing interests, you resent calls from strangers, you're on one or more Do-Not-Call lists, and 20 minutes [the length of many pollsters' interviews] is an eternity.

Two measurable trends illustrate Leve's narrative. The first is the growing number of Americans who have abandoned landline service for cell phones. According to in-person interviews conducted by the Centers for Disease Control and Prevention, the percentage of "cell phone only" Americans has grown from the low single digits to 47.7% of all adults in just 12 years. Another 16.1% of adults have both a landline and a cell phone but answer all or almost all calls on their cell phones.

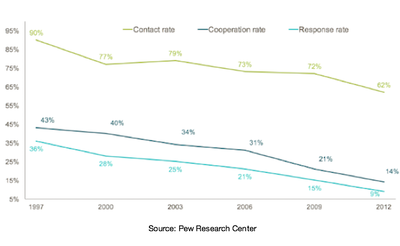

The second trend is the growing number of Americans now effectively out of reach of survey calls. According to the Pew Research Center, the typical response rate for its surveys has "fallen dramatically," from 36% to 9% between 1997 and 2012, and only part of that decline reflects Americans refusing to take part. Pew reported that its typical contact rate had fallen to just 62% in 2012, meaning that more than a third of the people called were never reached at all.

Yet neither of these trends alone spells the demise of polling or a decline in polling accuracy. Despite the lower response rates, the Pew report concluded, "telephone surveys that include landlines and cell phones and are weighted to match the demographic composition of the population continue to provide accurate data on most political, social and economic measures."

And therein lies a silver lining: As technology has steadily eroded some of the critical assumptions behind random sampling, pollsters have applied the science of survey research, just as steadily, to learning how to correct the growing statistical flaws in their unweighted data.

Today, in an eerie parallel to the early days of straw polls and quota sampling, better-educated people tend to be more available and more willing to take surveys than less-educated people. But unlike in those early days, pollsters have developed increasingly powerful tools to statistically adjust (or weight) their data in ways the early polling pioneers could only dream of.
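The simplest form of that adjustment, post-stratification (or cell weighting), can be sketched as follows. Everything here is hypothetical: the 100 respondents, their answers, and the population shares by education are invented, and this is not a description of SurveyMonkey's or any other pollster's actual procedure. Real pollsters typically weight on many variables at once (for example by raking) or use model-based adjustments. Each respondent's weight is their group's population share divided by that group's share of the sample, so overrepresented college graduates count for less and underrepresented non-graduates count for more.

```python
from collections import Counter

# Hypothetical raw sample of (education, vote) pairs. College graduates are
# overrepresented (60 of 100) relative to the assumed population share below.
respondents = (
    [("college", "A")] * 35
    + [("college", "B")] * 25
    + [("no_college", "A")] * 10
    + [("no_college", "B")] * 30
)

# Assumed population composition by education (invented for illustration).
POPULATION_SHARES = {"college": 0.35, "no_college": 0.65}


def poststratify(sample, population_shares):
    """Weight = population share of the respondent's group / that group's share of the sample."""
    counts = Counter(edu for edu, _ in sample)
    n = len(sample)
    return [population_shares[edu] / (counts[edu] / n) for edu, _ in sample]


def share_for(sample, weights, choice):
    """Weighted proportion of respondents who gave `choice`."""
    total = sum(weights)
    return sum(w for (_, vote), w in zip(sample, weights) if vote == choice) / total


weights = poststratify(respondents, POPULATION_SHARES)
unweighted = share_for(respondents, [1.0] * len(respondents), "A")
weighted = share_for(respondents, weights, "A")
print(f"Candidate A, unweighted: {unweighted:.1%}")
print(f"Candidate A, weighted:   {weighted:.1%}")
```

In this invented example, weighting lowers Candidate A's share from 45.0% to about 36.7%, because A's support is concentrated among the overrepresented college graduates. Weighting can only correct for characteristics the pollster measures and knows the population totals for, which is why the choice of weighting variables matters so much.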

The declines in response rates have not made telephone polls obsolete or inaccurate, just far more expensive and harder to conduct. All pollsters, whether they begin by attempting to contact random samples or draw upon alternative sources as we do at SurveyMonkey, must use statistical adjustment and modeling to remove the bias from raw data.

So the new era in polling now underway may be defined less by any particular means of collecting data than by the underlying work of making that data reliable and representative.

Another moment of reinvention is underway. Six years ago, when I interviewed Jay Leve for the National Journal, he said he "doesn't look to the future with despair but with wonder" at the opportunities for the polling profession.

We agree.