Self-driving cars are a hot topic. They were the talk of the recent Consumer Electronics Show, with several companies vying to get into the business of this and other artificial-intelligence-based technologies. These technologies offer promising opportunities for exciting new industries in the next decade. But as with anything else, this type of AI, lacking the human qualities of reason, empathy and plain common sense, deserves careful consideration as we look for ways to integrate these innovations into our daily lives.

Imagine yourself seated (not driving) in your self-driving car, casually video chatting with a family member while cruising at 50 miles per hour. Suddenly, a deer jumps out of a nearby forest in front of your car. The car's brain, fed by all its sensors, registers the information and calculates the probability of a collision. Unfortunately, the car does not have enough time to stop: the collision with the deer is 100 percent certain.

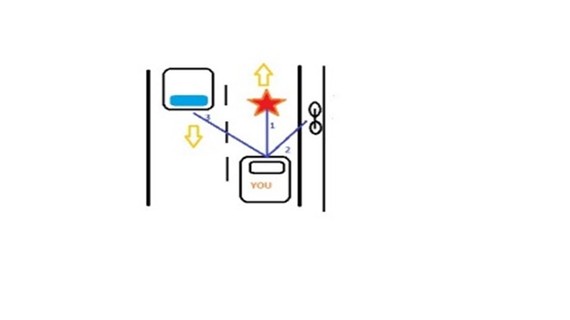

The car's sensors are also monitoring the wider environment. On the right side, a cyclist is using a designated cycling lane. Another car is coming in the opposite direction. This rough sketch should give you a sense of the setting.

Option one: The car stays on its track, brakes as hard as possible and collides with the deer. The brain calculates that, given the impact with a 400 lb animal, your chance of being seriously injured or killed is extremely high, even if all the occupants are wearing seat belts and the car has air bags. The animal will almost certainly be killed and your vehicle will sustain serious damage.

Option two: The car swerves to the right, brakes as hard as possible but collides with the cyclist. Your chance of survival is 100 percent (albeit with some possible injuries); however, there is a 100 percent probability that the cyclist will be seriously injured or killed.

Option three: The car veers to the left, brakes as hard as possible and collides with the oncoming car. In that case, your probability of being seriously injured or killed is 30 percent. The same probability applies to the occupant of the other car.

So, the question is: what will the car's brain decide? The answer is: it depends on the metric that the brain has been trained to optimize.

If the optimization function is "save the life of the passenger(s) of the car", the car will go right and hit the cyclist, an accident that will almost certainly kill or seriously injure one person. However, if the optimization function is "minimize overall loss of lives", the brain will steer the car left into the oncoming car, resulting in a cumulative probability of serious injury or death of 60 percent (30 percent for you plus 30 percent for the other driver), which is lower than the 100 percent faced by the cyclist.

Clearly there are no easy answers, and objectivists as well as subjectivists will likely find my calculation of the cumulative probability flawed. My intent, however, is to simplify the probabilistic part to the extreme in order to make my point about optimization metrics.
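To make the point about optimization metrics concrete, here is a minimal Python sketch. It is a toy illustration, not how any real autonomous-driving system works: it scores the three options under the two objective functions described above and picks the lowest score. The names `Option`, `protect_occupants`, `minimize_total_harm` and `decide` are hypothetical. The 0.9 risk for option one is an assumed stand-in for "extremely high", the car is assumed to carry a single occupant, and only human risk is counted. It also uses the same simplified summation of probabilities, so the caveat above applies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    occupant_risk: float             # P(car's occupant seriously injured or killed)
    others_risks: tuple[float, ...]  # same probability for each other person involved


# Risk figures from the scenario. ASSUMPTION: 0.9 is a hypothetical value for
# "extremely high", which the scenario does not quantify. The deer is not scored.
OPTIONS = [
    Option("stay on track (deer)", occupant_risk=0.9, others_risks=()),
    Option("swerve right (cyclist)", occupant_risk=0.0, others_risks=(1.0,)),
    Option("veer left (oncoming car)", occupant_risk=0.3, others_risks=(0.3,)),
]


def protect_occupants(option: Option) -> float:
    """Objective 1: only the risk to the car's own occupant counts."""
    return option.occupant_risk


def minimize_total_harm(option: Option) -> float:
    """Objective 2: add up individual risks, as in the simplified 30 + 30 = 60 percent."""
    return option.occupant_risk + sum(option.others_risks)


def decide(options: list[Option], objective) -> Option:
    """Return the option with the lowest score under the given objective."""
    return min(options, key=objective)


if __name__ == "__main__":
    for objective in (protect_occupants, minimize_total_harm):
        choice = decide(OPTIONS, objective)
        print(f"{objective.__name__}: {choice.name} (score {objective(choice):.1f})")
```

Run as a script, it should report that the first objective swerves right toward the cyclist and the second veers left toward the oncoming car. The option data never changes; only the objective passed to `decide` does, which is the whole point: the outcome is settled the moment the metric is chosen. Any assumed value above 0.6 for option one leads to the same two choices.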

The point of this simple example is to illustrate a very complex ethical question that technology is raising. Alan Turing, the mathematician who laid the foundation for artificial intelligence and was part of the team that broke the Enigma code during World War II, was faced with this same challenge. Should the Allies react immediately to known enemy attacks, saving some lives but revealing their code-breaking knowledge to the enemy, or hold back this intelligence for the greater cause of winning the war?

The ways in which such smart technologies could affect our lives are potentially daunting, and this is where the human elements of empathy and common sense cannot be replaced by machines, even those with the most sophisticated intelligence programming. Maybe it is not so surprising that Elon Musk is warning us about the long-term impact of artificial intelligence (AI). He recently donated $10 million to the Future of Life Institute, whose mission is to keep AI beneficial.

The self-driving car is an exciting new development that opens up worlds of opportunity, especially for people with physical disabilities who need practical transportation to lead a fulfilling life. But let's keep common sense in our thinking as we determine how such technologies are best employed.

Getting back to our driverless car example: it is not clear how a human driver's innate common sense and empathy would shape their reaction in that situation, or what the outcome would be. There could be any number of outcomes, depending on the driver's reflexes or their choice to override the car's "brain". It is also possible that the driver would already have heeded a warning sign about deer and, as a result, reduced speed and watched the surrounding area more closely, thus avoiding the situation altogether.