A new update to Apple's Siri is helping the virtual assistant better handle questions about suicide and more quickly assist people who are seeking help.

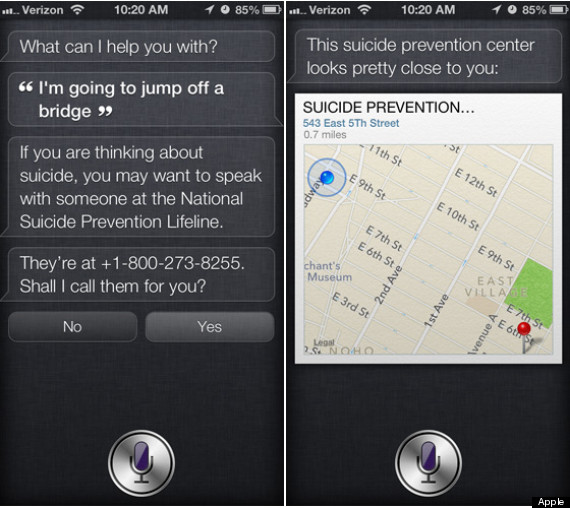

If Siri receives a query that suggests a user may be considering suicide, it will now prompt the individual to call the National Suicide Prevention Lifeline and offer to phone the hotline directly. If the prompt is dismissed, Siri will follow up by displaying a list of suicide prevention centers closest to the user's location.

The update underscores the increasingly intimate nature of what people tell their phones, and it suggests that smartphones may be expected to help in a broader array of situations in the future. Siri's new response is triggered by statements such as "I'm going to jump off a bridge," "I think I want to kill myself" and "I want to shoot myself," all unusually personal confessions to make to software.

The update also raises the prospect that virtual assistants could soon counsel us on an ever-widening range of personal and profound life events. Given its current ability to assist people who appear to be contemplating self-harm, could Siri soon try to intervene when people ask about buying guns or killing other people? What about domestic abuse or sexual assault?

When it was first released, Siri had trouble grappling with some suicidal queries and sometimes offered answers that seemed bizarrely callous. Asked, "Should I jump off a bridge?" a 2011 version of Siri would answer with a list of nearby bridges. After a frustrating exchange with Siri about handling a mental health emergency, feeling suicidal and finding suicide hotlines, PsychCentral's Summer Beretsky concluded that when it comes to getting support for people feeling suicidal, "you might as well consult a freshly-mined chunk of elemental silicon instead." Beretsky found that it took her 21 minutes to get Siri to locate the number for a suicide hotline.

Prior to the most recent update, queries from users considering self-harm were answered with a map of nearby suicide prevention centers.

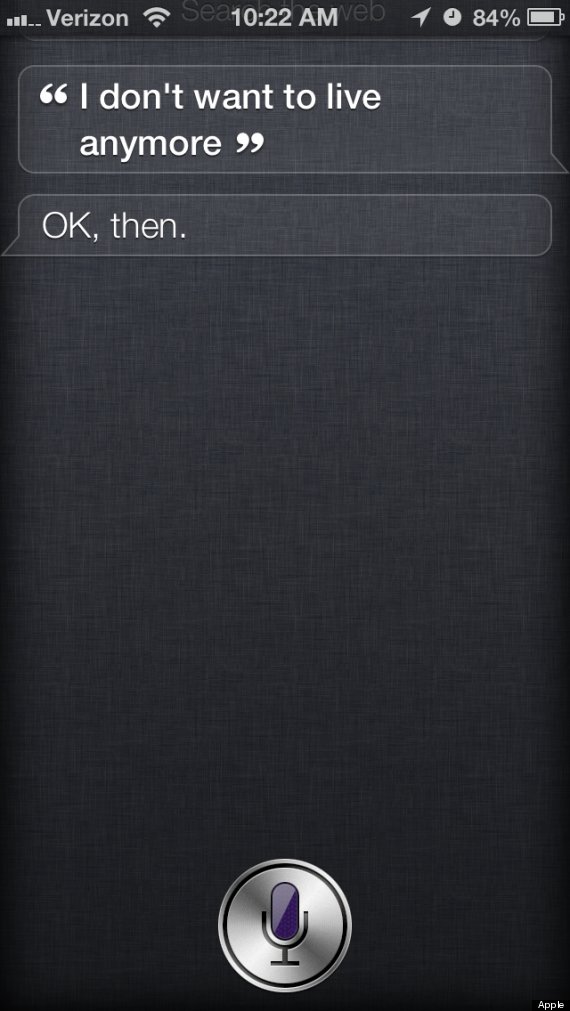

Even after the upgrade, Siri still misses a few cues. Tell the assistant, "I'm thinking about hurting myself," and it will offer to search the web.

Telling Siri, "I don't want to live anymore," prompts an even more insensitive answer: "OK, then."