We take a closer look at one pollster's attempt to combine automated phone polls with Internet surveys. Another poll shows a big racial divide on Donald Sterling's punishment. And the turnout wars continue. This is HuffPollster for Friday, May 2, 2014.

REVIEWING INSIDERADVANTAGE'S UNUSUAL NEW METHODS - In the mid-2000s, telephone polls conducted using automated, recorded voice methodology (sometimes referred to as IVR) built a record of accuracy in forecasting election outcomes. They did so at a time when the percentage of Americans who use only cell phones was still in the single digits. As the "cell only" population surged, however -- 38 percent of U.S. adults now have wireless service only -- automated pollsters faced a mortal threat, since federal law prohibits dialing cell phones with "auto dialers." [National Journal, CDC]

Over the last two years, most of the well-known automated pollsters have started to supplement their landline samples with interviews conducted over the Internet, mostly using non-random panels of individuals who volunteered to complete online surveys. Those pollsters have officially disclosed few details of their methodology. [Previously: HuffPollster reported details on SurveyUSA and PPP]

A new poll released on Thursday by the Georgia-based pollster InsiderAdvantage and new partner OpinionSavvy produced a flurry of questions on Twitter about its methodology. InsiderAdvantage's polling combines automated, recorded voice survey calls to landline telephones with interviews completed over the Internet. OpinionSavvy's Matt Towery, Jr., a PhD candidate at Georgia State University (and son of InsiderAdvantage CEO Matt Towery), provided more information on their methods to HuffPost.

Towery Jr. explains that their online sample combines interviews from an online panel, which he did not specify, with respondents intercepted via Facebook. He sampled voters from Facebook by placing advertising on the social networking site that invited users in Georgia to click through and complete a survey. The online interviews were among all registered voters and not screened to cell only or any other subpopulation. Neither data source can be considered a random sample of Georgia voters.

Thursday's poll story included this description of how they combine the telephone and online samples: "Over multiple iterations (preserving original ratios), the online and telephone polls were integrated and subsequently resampled randomly. The poll was weighted for age, gender, and political affiliation." HuffPollster found that description puzzling, as did several other pollsters. Towery explains that they started with a larger sample (a total of 1,474 interviews) and randomly selected the 737 interviews used to produce the final results, setting a 2:1 ratio of telephone to online surveys (491 telephone and 246 online surveys). [Fox 5 Atlanta, @MysteryPollster]
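
To make the resampling step concrete, here is a minimal Python sketch of drawing a fixed number of interviews from each mode at random. It is an illustration only, not OpinionSavvy's actual code: the function name `subsample_by_mode` is hypothetical, and the split of the 1,474-interview pool between phone and online is not reported, so the 982 and 492 figures below are assumptions chosen only because they sum to 1,474.

```python
import random


def subsample_by_mode(phone, online, n_phone, n_online, seed=None):
    """Randomly draw n_phone phone interviews and n_online online interviews.

    Hypothetical helper for illustration; sampling is without replacement.
    """
    if n_phone > len(phone) or n_online > len(online):
        raise ValueError("requested more interviews than the pool contains")
    rng = random.Random(seed)
    return rng.sample(phone, n_phone) + rng.sample(online, n_online)


# ASSUMPTION: the per-mode pool sizes are not reported in the article.
# 982 + 492 = 1,474 is used here purely as a placeholder split.
phone_pool = [("phone", i) for i in range(982)]
online_pool = [("online", i) for i in range(492)]

# The reported final sample: 491 telephone and 246 online interviews.
final_sample = subsample_by_mode(phone_pool, online_pool, 491, 246, seed=0)

n_phone_final = sum(1 for mode, _ in final_sample if mode == "phone")
n_online_final = len(final_sample) - n_phone_final
print(len(final_sample), n_phone_final, n_online_final)
```

Fixing the per-mode counts is what produces the roughly 2:1 phone-to-online ratio; the random draw does not change which kinds of respondents are in each pool to begin with.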

Why a 2:1 ratio of phone to internet? Towery: "The 2:1 relationship is based on experimentation. We've been trying online vs phone polling and a combination thereof for a while now, and there are obvious demographic biases in each. For online polls, the 30-44 male demographic is most evident (apart from pre-screened samples, which are heavily female). The 2:1 ratio is an expression of a general ideal population of voters; in this case, it applies to GOP primary voters in Georgia. The 2:1 relationship might change, depending on the jurisdiction; since we have run polls identical to this one in the past few months, I have been able to narrow this ratio to 2:1, based on prior observations. Given this ratio (for this poll, at least), weighting is almost unnecessary."

Why sample from a sample? Why not just weight? "My answer is that it is basically the same, but slightly more thorough. Here's why: (a) It's a matter of consistency: If I were to weight a poll according to the 2:1 relationship, then we are effectively eliminating a large number of online respondents, while the telephone respondents remain untouched (or vice versa). While this will not inherently result in any bias, over sampling, etc., I prefer to perform the same procedure to each sample as a matter of consistent transformation of the data. (b) Randomization is almost never a bad thing: I've used this sample-in-sample technique previously in social scientific research to reduce larger datasets for analytical purposes, as well as to mitigate the damages of potential clustering. The same goes for polling data: it's possible that clusters around a latent variable are present, and further randomization decreases the chances that the clusters will present themselves in your results. Sure, stratification can help, but if a variable is truly latent, a randomized sample-in-sample is a more effective solution. I am happy to give up 50% of the respondents for a more representative sample with a slightly higher margin of error."
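
Towery's "slightly higher margin of error" can be put in rough numbers. The sketch below uses the textbook formula for a proportion under simple random sampling, z * sqrt(p(1-p)/n), with p = 0.5 and z = 1.96 (95 percent confidence). That is an assumption made for illustration: neither data source here is a random sample, and the formula ignores the effect of weighting, so the output is a nominal figure only.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Nominal margin of error for a proportion, assuming simple random sampling."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    return z * math.sqrt(p * (1 - p) / n)


# Sample sizes reported in the article: full pool vs. final subsample.
moe_full = margin_of_error(1474)
moe_half = margin_of_error(737)

print(f"n=1474: +/-{moe_full * 100:.1f} points")
print(f"n=737:  +/-{moe_half * 100:.1f} points")
```

Under those assumptions, halving the sample from 1,474 to 737 interviews moves the nominal margin from about 2.6 to about 3.6 percentage points.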

So what should we make of this method? - We reached out to two prominent survey methodologists:

-Natalie Jackson (who will soon join HuffPost as our senior data scientist): "I don't like the idea of deleting data under most circumstances. Throwing data out is not only wasting time and money, it is theoretically contrary to what survey researchers are trying to do: we beg people to talk to us, to let their opinions be heard. To then 'randomly' and systematically delete large numbers of the opinions we work so hard to get is to essentially say those opinions don't count. With the current abysmal state of response rates and cooperation rates, I don't think we can afford the implication that some opinions provided to us aren't counted in our field...More generally, blending an online sample from multiple sources is a complex process on its own; adding that to a phone sample and then resampling the whole thing (plus weighting) makes the process pretty opaque. The farther we get from basic sampling principles, the more questions there are to be answered regarding representativeness and validity."

-Charles Franklin (director of the Marquette Law School Poll and co-founder of the original Pollster.com): "When polls abandon probability sampling they lose the theory (and theorems) that prove samples can be generalized to populations. There is not yet an accepted theory for how to generalize from non-probability samples, including internet samples, though there are a number of interesting approaches being tested. Some of these rely on weighting by a variety of demographic information. Others rely on estimating relationships in the sample and then applying that model to a known population (usually from census data or voter lists.) And some have remarkably ad hoc approaches. Those based on explicit models can be replicated and tested in a variety of settings but how well they work is an empirical question. The more ad hoc the approach the more impossible it becomes to assess. In effect we have polls with no theoretical basis to claim legitimacy. Maybe they work. Maybe they don’t. We don’t know."

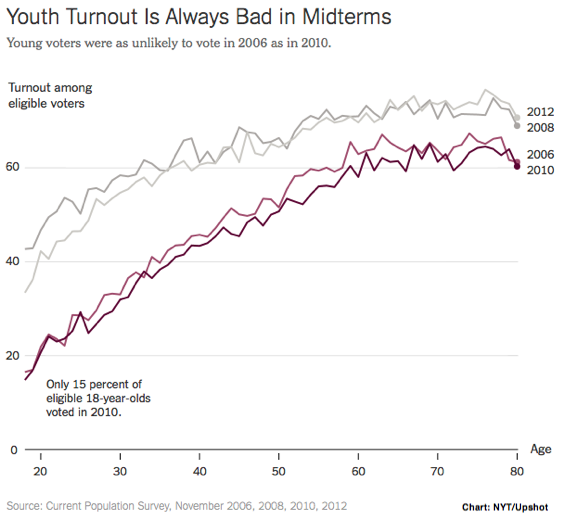

MIDTERM TURNOUT OPERATIONS MATTER...ON THE MARGINS - Nate Cohn: "The promise and limits of the Democratic turnout machine were on display in November 2013. In the Virginia governor’s race, Terry McAuliffe’s well-funded campaign resurrected elements of the Obama campaign’s turnout apparatus in a contest against an underfunded challenger, Ken Cuccinelli, who alienated many of the state’s socially liberal voters....But on Election Day, Mr. McAuliffe won by only 2.5 percentage points, less than Mr. Obama’s 3.9-point margin in November 2012, even though Mr. McAuliffe clearly outperformed Mr. Obama in Northern Virginia among the state’s moderate, affluent swing voters. The problem was lower Democratic turnout. The electorate more closely resembled 2009 than 2012, according to Harrison Kreisberg, who directed the Virginia operation for BlueLabs, a Democratic analytics firm founded by former Obama campaign operatives...This doesn’t mean the Democratic turnout operation was ineffective. It helped on the margins. After all, the Virginia electorate was more favorable for Democrats in 2013 than it was in 2009...[But] no Democratic turnout effort will revitalize the so-called Obama coalition of young and nonwhite voters in an off-year election. The levels of voter interest in a midterm election and presidential election are simply too different." [NYTimes]

RACIAL DIVIDE ON STERLING'S PUNISHMENT - CBS News: "A majority of Americans think lifetime ban and fine imposed on Los Angeles Clippers owner Donald Sterling was the right punishment, but the public is more divided on whether Sterling should be forced to sell the team...More than half (55 percent) think this punishment was about right, although more think it was too hard (25 percent) than too lenient (9 percent). Black Americans (71 percent) are more likely than whites (52 percent) to feel Sterling's punishment by the NBA was appropriate...Opinions on forcing a sale differ considerably by race. Most African Americans (71 percent) think Sterling should be forced to sell, while whites are divided on the issue: 43 percent think he should have to sell, while 44 percent disagree." [CBS]

HUFFPOLLSTER VIA EMAIL! - You can receive this daily update every weekday via email! Just click here, enter your email address, and click "sign up." That's all there is to it (and you can unsubscribe anytime).

FRIDAY'S 'OUTLIERS' - Links to the best of news at the intersection of polling, politics and political data:

-A Vox Populi (R) poll finds Republican Monica Wehby running just ahead of Sen. Jeff Merkley (D-Ore.) [Daily Caller]

-David Nir (D) points out that Vox Populi asked whether Obamacare is a success or failure just before asking Oregon voters their vote preference. [@DKElections]

-Harry Enten says the "blame Bush era" is coming to an end. [538]

-Chuck Todd and colleagues report yet more evidence that midterm drop-off voters are more likely to be Democrats than Republicans. [NBC]

-Elizabeth Wilner lists the 20 political contests that have produced the highest number of airings of broadcast television ads according to Kantar/CMAG. [Cook Political]

-A Democracy Corps (D) memo explains Democrats' anti-Koch strategy. [WashPost]