Here's the dirty little secret about diet research. Most of it sucks.

There, I've said it.

Now I'll show you why.

To do so, we have to briefly -- and, I promise, painlessly -- discuss one or two fundamentals of research design. Only then will you see just how misleading, inadequate, often irrelevant and sometimes dangerous much of the nutrition research you hear about in the news really is.

How to do a randomized, controlled study

Let's say I'm a drug company and I want to find out if the new blood pressure drug my company has been working on actually lowers blood pressure in humans. So I design the following study. I take a group of people and make sure they are as "identical" as people can be: "30-year-old non-smoking men from the Northeast with no previous health issues but moderately high blood pressure," for example. In other words, I match the subjects for age, sex, medical history and so on, all the things that could plausibly skew the results, or at least all the things I can think of. I don't really care how these folks differ in their television viewing habits or how much they like iPhones, but I do want to make sure they're similar on any measure likely to affect blood pressure. So I make sure they're all non-smokers, not overweight, free of pre-existing heart disease, under the same level of stress, not taking any other medications, and anything else I can think of. (If you're thinking this is pretty hard to do, you're right: it's next to impossible. But it's the research "ideal," and people who get most of it right publish better research than those who get less of it right.)

So let's agree that what we're trying to do here is "match" our subjects, to make sure they're as similar as possible, like the human equivalent of lab rats with identical genes bred in an identical environment. Yes, yes, I know it's impossible, but you need to understand why that's the goal, why "sameness" of subjects is important. And it's because of what we're about to do next.

Which is to randomly assign these very similar subjects to one of two groups.

For the length of the study, both groups live identical lives, eat identical food, sleep identical hours -- with one exception and one exception only: Group one gets the blood pressure medication while group two gets a placebo (basically a dummy pill with no active ingredient).
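For readers who like to see the mechanics, here's a minimal Python sketch of that random-assignment step. The subject IDs, the group sizes and the `randomize` helper are all hypothetical, made up for illustration. The only point is that a shuffle, not the researcher, decides who gets the drug and who gets the placebo.

```python
import random

def randomize(subject_ids, seed=None):
    """Hypothetical helper: shuffle matched subjects and split them into
    two groups of (as nearly as possible) equal size."""
    rng = random.Random(seed)     # seeded generator so the assignment can be reproduced
    shuffled = list(subject_ids)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)         # chance, not the researcher, decides the order
    half = len(shuffled) // 2
    return {"drug": shuffled[:half], "placebo": shuffled[half:]}

if __name__ == "__main__":
    subjects = [f"S{i:02d}" for i in range(1, 21)]  # 20 invented, already-matched subjects
    groups = randomize(subjects, seed=42)
    print("drug:   ", groups["drug"])
    print("placebo:", groups["placebo"])
```

Because nobody hand-picks the groups, any leftover differences between subjects that the matching missed should, on average, end up spread across both groups rather than piled into one.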

If there were a significant difference in actual blood pressure between the two matched groups -- say, if the medication group had significantly lower blood pressure at the end of the study than the placebo group -- we'd have a darn good reason to conclude that the blood pressure med was the cause. We'd have tested the hypothesis that "this blood pressure medication lowers blood pressure more than could be predicted by chance," and, in this hypothetical study, confirmed it. The drug did indeed perform as hoped.
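What does "more than could be predicted by chance" look like in practice? One common approach is a permutation test. The Python sketch below is purely an illustration, not the analysis any particular trial used, and the blood pressure readings in it are invented. The test asks: if the "drug" and "placebo" labels meant nothing and we shuffled them at random, how often would we see a gap at least as big as the real one?

```python
import random
from statistics import mean

def permutation_p_value(treated, control, n_permutations=10_000, seed=0):
    """One-sided permutation test: the fraction of random relabelings whose
    drop in mean blood pressure is at least as large as the observed drop."""
    rng = random.Random(seed)
    observed = mean(control) - mean(treated)  # positive if the drug group ended lower
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the group labels were meaningless
        diff = mean(pooled[n_treated:]) - mean(pooled[:n_treated])
        if diff >= observed:
            at_least_as_extreme += 1
    # add-one correction keeps the estimate from ever reporting p = 0
    return (at_least_as_extreme + 1) / (n_permutations + 1)

if __name__ == "__main__":
    # Hypothetical end-of-study systolic readings (mmHg), invented for illustration
    drug = [128, 131, 125, 133, 127, 129, 126, 130]
    placebo = [138, 141, 136, 139, 143, 137, 140, 135]
    p = permutation_p_value(drug, placebo)
    print(f"mean difference: {mean(placebo) - mean(drug):.1f} mmHg, p ~ {p:.4f}")
```

A tiny p-value says that a gap this large almost never shows up by shuffling labels alone. That conclusion holds only because the groups were matched and randomized in the first place.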

Now let me tell you how most of the studies involving diet that you hear about in the media resemble that study about as much as West Virginia resembles West Hollywood.

Enter Epidemiology

The vast majority of the studies that make it to the mainstream media are epidemiological studies, which work like this: You take a lot of data from large populations and then you see what things go with what things. You notice, for example, that in countries where they eat a lot of fiber, there is less incidence of colon cancer. Or that people who have lower levels of vitamin D tend to have higher rates of MS. Or that diabetes incidence exploded upward under the Clinton administration. Or that people who eat more saturated fat have higher total cholesterol. (Whether these correlations matter at all -- and what they actually mean, if anything -- is a topic for a different day. Right now we're just talking about the data, not whether they're clinically important.)

So epidemiology is terrific for observing things, noticing what's found together, and for its prime purpose, which is to generate hypotheses. The idea that smoking causes lung cancer came out of epidemiology. Epidemiologists noticed consistently higher rates of lung cancer among smokers. That observation mattered because, repeated again and again, it led to the hypothesis that cigarette smoking causes lung cancer. That hypothesis was then tested rigorously, time and time again, in study after study. Today there is little controversy around it (unlike cholesterol): it is considered established that cigarettes wildly increase your risk of lung cancer.

But here's what happens with epidemiology and diet studies.

Data will show that, for example, over a period of 25 years, saturated fat consumption went up in a population and so did cholesterol. Now, that should generate a hypothesis -- i.e. that saturated fat consumption raises cholesterol. That hypothesis can now be tested clinically in a variety of settings (see the blood pressure medication example, above).

(And we would probably find that saturated fat consumption does raise serum cholesterol but by raising HDL and the harmless LDL-A particles while lowering the harmful LDL-B particles, ultimately improving your lipid profile! But I digress.)

The point is that the epidemiological observation generates something that can now be tested.

But that's not what happens.

What happens is that these observational studies become the basis of health policy. Instead of generating hypotheses that can be tested and either confirmed or refuted, they generate an assumption of cause and effect, which the media reinforces until it hardens into public health policy.

"Egg eaters have higher rates of suicide, study finds"

Take the made-up headline, "Egg eaters have higher rates of suicide, study finds." Stuff like this comes out every single day. (I'm just waiting for the inevitable CNN story on how "higher intakes of saturated fat" are "associated" with "higher rates of gang violence." Even if you were absent for Critical Thinking 101, you should immediately see the problems with this kind of association study.)

First of all, there are zillions of variables and gazillions of associations. Is saturated fat, for example, a "marker" for the Western diet? And what else is in that Western diet? Is saturated fat consumption in a country a "marker" for wealthier nations, and if so, what else is going on in those wealthier nations? More stress? More tobacco? More pollution? Less sleep? Less fiber? Who knows? It would take a computer 12 times the size of IBM's legendary Deep Blue to sort out all the confounding variables, the things that could account for the associations observed.

Here's a famous example of how this works, taught in every statistics class: Yellow Finger Syndrome.

Yellow Finger Syndrome

There is a statistically significant positive correlation between a noticeable yellowing on the fingertips and lung cancer. For years, those with a strange yellowing on their fingertips developed lung cancer at a much higher rate than those who did not have yellowish fingertips. Beginning statistics students were taught this association to illustrate the concept of a confounding variable. The confounding variable in this case is smoking. Smoking is associated with both lung cancer and with yellow fingers. Yellow fingers don't cause lung cancer, even though they are frequently found together (correlated).
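The yellow-finger trap is easy to reproduce in a toy simulation. In the Python sketch below, every number (the smoking rate and the odds of yellow fingers and of cancer) is invented for illustration. By construction, yellow fingers have zero effect on cancer. Even so, they "predict" it strongly across the whole population. The illusion disappears once you compare smokers with smokers and non-smokers with non-smokers, a technique known as stratifying by the confounder.

```python
import random

def simulate(n=100_000, seed=1):
    """Toy population in which smoking causes both yellow fingers and lung cancer.
    Yellow fingers have no causal effect on cancer. All rates are made up."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        smoker = rng.random() < 0.3
        yellow = rng.random() < (0.6 if smoker else 0.02)   # depends only on smoking
        cancer = rng.random() < (0.15 if smoker else 0.01)  # depends only on smoking
        people.append((smoker, yellow, cancer))
    return people

def cancer_rate(people, yellow, smoker=None):
    """Lung cancer rate among people with (yellow=True) or without yellow fingers,
    optionally restricted to smokers (smoker=True) or non-smokers (smoker=False)."""
    group = [c for s, y, c in people
             if y == yellow and (smoker is None or s == smoker)]
    return sum(group) / len(group)

if __name__ == "__main__":
    people = simulate()
    print("Everyone:    yellow %.3f vs not %.3f"
          % (cancer_rate(people, True), cancer_rate(people, False)))
    for smoker in (True, False):
        label = "Smokers:    " if smoker else "Non-smokers:"
        print("%s yellow %.3f vs not %.3f"
              % (label, cancer_rate(people, True, smoker),
                 cancer_rate(people, False, smoker)))
```

Stratifying only works here because we already know smoking is the confounder. With real diet data, the list of candidates is nearly endless, and some of them may never have been measured at all.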

Researchers love to think they're very sophisticated and have all kinds of statistical magic to perform on the data to rule out this kind of "confounding." I think they're overly optimistic. I've seen association studies miss the most obvious connections and fail to account for many other plausible ones. There's also a good deal of confirmation bias in research: people frequently find what they look for and expect to find, paying close attention to any correlations that support their hypothesis and throwing out the many that don't.

The fabulous punch line you've all been waiting for

There are very few writers in the health-and-wellness space I admire more than Denise Minger. No one I know of can debunk a study better, which is all the more remarkable because she does it with the kind of style, wit and writing chops rarely seen outside the essays of Merrill Markoe. And she does all this armed with nothing but absolutely ironclad data, which she is happy to show you.

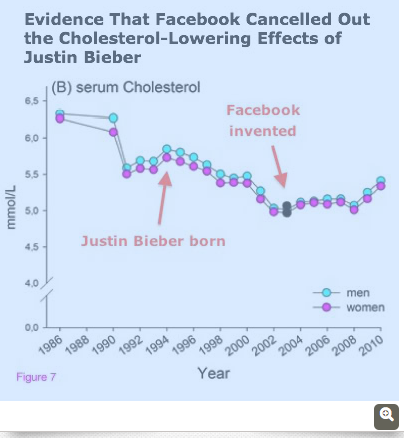

On a recent trip around Internet-land, I came across this chart she made a couple of years ago while writing about something much like what I'm writing about today: the craziness of drawing assumptions, and making health policy, from epidemiological, observational studies.

I'll let the graph speak for itself. Seems to me it's perfect evidence that Facebook has been really bad for cholesterol levels. And since we already "know" cholesterol causes heart disease, it seems an open-and-shut prescription.

Wanna wipe out heart disease? Shut down Facebook!

I'll let you enjoy this little masterpiece from Denise Minger without further comment from me.

After all, none is needed.

For more by Dr. Jonny Bowden, click here.
