I experienced a sinking feeling, a mix of fear, futility and recognition, when I first saw the images generated by Google's artificial neural net, now dubbed Deep Dream.

Since the release of downloadable Deep Dream code, and of online image-processing apps (if you don't mind the wait), users seem to have grown used to the Deep Dream AI very quickly, as we grow used to so many novel sensations, moving with historically unprecedented speed from amazement to familiarity to contempt. I'd like to argue here that, where Google's AI is concerned, this accelerated cycle of indifference should be suspended for a while.

In his novel Solaris, Stanislaw Lem details the strange properties of an active ocean-planet: "Du Haart was the first to have the audacity to maintain that the ocean possessed a consciousness. The problem, which the methodologists hastened to dub metaphysical, provoked all kinds of arguments and discussions. Was it possible for thought to exist without consciousness? Could one, in any case, apply the word thought to the processes observed in the ocean?"

The ocean mimics human objects, and ultimately synthesizes artificial humans. But all attempts at explicit communication end in failure, and the question of the consciousness of the ocean remains unresolved.

The Turing test for artificial intelligence asks whether a machine can answer questions in such a way that it passes for human. Lem's model, by contrast, holds that equating "human" with "intelligence" is too narrow an approach to the problem of non-human intelligence. He proposes that any truly alien intelligence will remain so profoundly incomprehensible that the most we may conclude about it is that its behavior seems not to arise from nature alone.

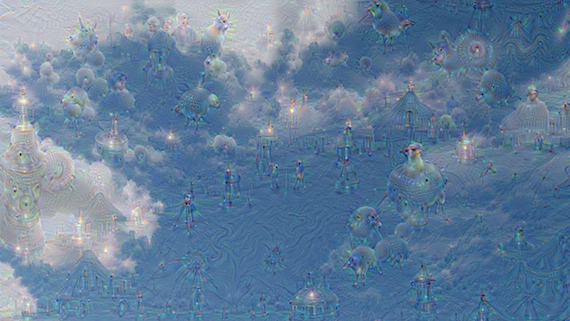

We will return to Lem's idea. First, let's look at an image. Here, Google researchers fed the AI a picture of a partly cloudy sky and instructed it to look for objects familiar to it from the millions of images it had been trained on. Upon finding hints of such objects, the AI was to enhance them. The output was fed back in repeatedly, until the image was transformed:

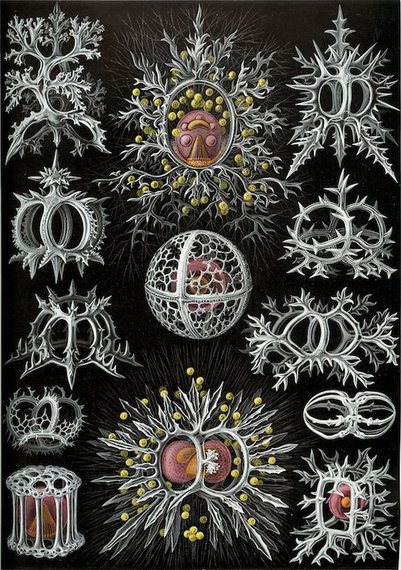

The blue sky and white clouds have been churned into a soup of fragmentary images. Half-visible hybrids of buildings and animals abound, resembling Bosch's monsters in a way. But the AI does not fill the plane as Bosch tends to do. Rather, it makes small things upon a terrible vastness, as in Dalí's landscapes. The individual objects are not Boschian in texture; they resemble colonial organisms like Volvox, or light rainbowed by diffraction gratings. Are they like Ernst Haeckel's catalogues of radiolarians, then? Not at all, and here we begin to get at the nature of the thing. Haeckel's work is tense with effort, as he strains to mold his human sense of shape and detail to the specimens before his eye.

A plate from Ernst Haeckel's Kunstformen der Natur (1899), showing radiolarians of the order Stephoidea

The AI, by contrast, renders its creations with apparent effortlessness. They have nothing to do with Bosch, Dalí, nature, or Haeckel.

And yet they have an awful sense of familiarity. They feel like the downside of an acid trip, when you cannot get things straight before your eye and a profusion of useless complexities twinkles in and out of being. But this experience, the fading acid trip, has an awful familiarity of its own. I believe we recognize it because it is an explicit rendering of an implicit awareness: an awareness of the ugly mechanics of sight, which generates limitless hints of images -- false matches -- as it moves from retina to consciousness. Our awareness of these false matches is ordinarily suppressed by the machinery of mind, lest our surface consciousness find the world impossible to navigate -- lest we be blinded by garbage data.

In his Treatise on Painting, Leonardo da Vinci offered the following advice for partly peeling back this suppression in a controlled way, as a creative technique:

...it would not be too much of an effort to pause sometimes to look into these stains on walls, the ashes from the fire, the clouds, the mud, or other similar places. If these are well contemplated, you will find fantastic inventions that awaken the genius of the painter to new inventions, such as compositions of battles, and men, as well as diverse composition of landscapes, and monstrous things, as devils and the like. These will do you well because they will awaken genius with this jumble of things.

Acid, by contrast, strips away that suppression altogether, and we confront directly and without mercy the "jumble of things" our sight is made of.

According to Google's account, networks like this one stack some 10 to 30 layers of artificial neurons, loosely modeled on the neurology of sight, which moves up a hierarchy of processing from contrast detection, to line detection, to shape detection, to shape recognition and so forth, until we see what we see. So does the AI imagery derive its hideous quality from this familiarity, from the fearful certainty that we are watching our own cognitive vivisection?

Maybe.
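For the technically curious, the enhancement loop described for the cloud picture (find faint hints of familiar features, strengthen them, feed the result back in) can be sketched in a few lines of Python. This is not Google's code, only a toy under stated assumptions: a one-dimensional "image" stands in for a photograph, and a single hand-picked edge detector (a hypothetical kernel) stands in for one layer of a trained network. Each pass nudges the signal in whichever direction makes the detector respond more strongly, which is the gradient-ascent idea behind Deep Dream.

```python
def activations(signal, kernel):
    """Responses of a toy 'feature detector': a valid 1D cross-correlation."""
    n = len(signal) - len(kernel) + 1
    return [sum(k * signal[i + j] for j, k in enumerate(kernel)) for i in range(n)]


def energy(signal, kernel):
    """The quantity to amplify: half the sum of squared detector responses."""
    return 0.5 * sum(a * a for a in activations(signal, kernel))


def gradient(signal, kernel):
    """d(energy)/d(signal): each response pushes back on the inputs it read."""
    acts = activations(signal, kernel)
    grad = [0.0] * len(signal)
    for i, a in enumerate(acts):
        for j, k in enumerate(kernel):
            grad[i + j] += a * k
    return grad


def dream(signal, kernel, steps=20, step_size=0.1):
    """Repeatedly feed the output back in, enhancing whatever the detector 'sees'."""
    x = list(signal)
    for _ in range(steps):
        g = gradient(x, kernel)
        # Scale by the mean gradient magnitude so every step has a similar size.
        scale = sum(abs(v) for v in g) / len(g) + 1e-8
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x


# Usage: a faint, cloud-like wobble and a hypothetical edge detector.
clouds = [0.0, 0.1, 0.0, -0.1, 0.05, 0.0, 0.1, 0.0]
edge = [1.0, -1.0]
dreamed = dream(clouds, edge)
print(energy(clouds, edge), energy(dreamed, edge))
```

Because each step follows the gradient of a quantity the detector "likes", the edges it faintly noticed grow sharper with every pass. A real Deep Dream run does the same with a photograph and a chosen layer of a trained convolutional network, adding refinements such as working at several image scales; the toy keeps only the feedback loop.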

I have heard a serious argument made that what we experience as mind is largely a byproduct of visual processing; that the sheer utility of sight to the survival of some ancestor animal stimulated the development of ever-more-powerful visual processors, so that sight gradually crowded out the other senses in the amount of brain capability it demanded, and in the end, the eye led to the mind.

This possibility casts the question of the Google AI in a rather different light.

To my eye, the images coming from Deep Dream represent the first true instances of AI aesthetics. They have an irregular quirkiness, and the effortless rendering of forms far more detailed than the human hand would ever dare attempt is only one of their qualities.

Here is an image more radical than the previous ones. The publicly available code seems to be based on the version of the Google AI trained on animal images, including a disproportionate number of dogs. Google has acknowledged a second AI, trained on architecture, which I haven't seen circulating in public. Given nothing but noise, it was asked to find an image in it.

We see here analogues of Escher's fluid conception of space, in which the hulk of a tower might become a shadowed hallway, and a building might flicker between high and low perspectives. But the terraced curves of the landscape belong to the AI, as do the stutter-fractals: pieces of fractal appear and disappear without settling into mechanical reliability. Thus the AI aesthetic has neither the deterministic math aesthetic of the fractal nor the copy-paste-paste-paste machine aesthetic of so much electronic music. It has its own unpredictable integrity.

Here is one last image, generated again from noise:

There is mood in this impossible façade. A crazily prismatic sunshine falls upon it, but all its entrances terminate in darkness. It is nearly symmetrical and rhythmic in its variations of scale -- it is physically impossible and complex beyond reckoning -- it is laden with good cheer and menace. This is what it is to me, because I respond to it as I do to artwork -- as I do to things with intentional meaning. But not everything with an aesthetic has intentional meaning; not all of it is artwork.

What is this thing?

Consider a race of beautiful men, made out of rose gold and titanium, born able to draw Fourier transforms more easily than Giotto drew his famous circle. Would such men make the pictures we would make had we but the skill and brains and a spare millennium? Would their art be uncannily familiar because it is our own minds made clear at last, or would it be fundamentally alien? Could we expect to speak to such men, and they to us?

Would we lose heart in our own art, labored and ill-figured as it is? Would we come to resemble that band of miserable Neanderthals who once camped next to some Cro-Magnons and made clumsy imitations of the miraculous Cro-Magnon tools and decorations, so that the archaeological record of their adjacent camps is overpowering in its pathos, in its story of one species rising and another nearing its end?

We should not get too comfortable with Deep Dream quite yet, because I think we are, at long last, facing the very beginning of this long-anticipated set of problems. I see many people gleefully using the Deep Dream app the way they might use a fresh filter in Photoshop. Some are good at it, others less so. But I am not convinced they are using a tool so much as collaborating with a faceless partner. The current version of the AI is almost comically circumscribed compared with its clear potential. Only a comedian of an ancient Greek degree of cruelty would propose limiting a tremendous mind to seeking parts of dogs and lobsters in snapshots uploaded by a mob.

As for the later pictures we studied, the ones generated from no image at all -- are we looking at art? Were they made by a mind? Was there intent? Clearly not, we should like to reassure ourselves. It is true that a neural net created them, a net whose workings are opaque even to its own creators. But surely, we would claim, it has no consciousness. Lem's question recurs: "Was it possible for thought to exist without consciousness?" This is important -- it could not be more important; important to us not only as art lovers, but as participants in the still-unfolding story of intelligence. We appear to be without a map and far from shore in the Turing archipelago.

---

SOURCES AND RECOMMENDED READING:

Ernst Haeckel image:

https://commons.wikimedia.org/wiki/File:Haeckel_Stephoidea.jpg

Google blog account of the project:

http://googleresearch.blogspot.co.uk/2015/06/inceptionism-going-deeper-into-neural.html

Turing test (Alan Turing's original 1950 paper proposing it, "Computing Machinery and Intelligence"):

http://loebner.net/Prizef/TuringArticle.html

Vision and Art: The Biology of Seeing, by Margaret Livingstone:

http://www.amazon.com/Vision-Updated-Expanded-Margaret-Livingstone/dp/1419706926/