WASHINGTON -- A modest experiment conducted by a Republican pollster found that just prior to last year's Delaware Republican primary, an automated, or "robo," telephone poll sampled more self-described conservatives than an otherwise identical parallel survey that used live interviewers. The finding provides support for a suspicion held by many poll observers: that when other aspects of methodology are held constant, automated surveys tend to skew toward respondents with stronger opinions.

The experiment, conducted by Republican pollster Jan van Lohuizen, was described last month in an article co-authored with University of Santa Catarina Professor Robert Wayne Samohyl that appeared in the online publication Survey Practice. The article describes two surveys that van Lohuizen conducted among likely Republican primary voters in Delaware one week prior to Christine O'Donnell's stunning upset of Rep. Mike Castle.

Van Lohuizen, who was Castle's pollster, fielded both polls on Sept. 7-8, interviewing 400 likely Republican primary voters using live interviewers and another 300 with an automated methodology. The Castle campaign sponsored the live interviewer survey, and van Lohuizen covered the cost of the automated poll. Both were relatively short "tracking" surveys that asked just a handful of substantive questions.

Thus, the population, sample design, survey questions and timing were virtually identical. The key difference was that one survey used live interviewers and the other was automated.

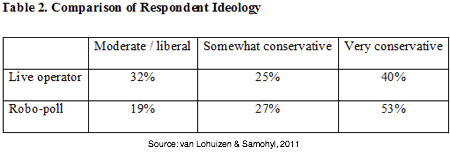

That distinction yielded very different respondents: The live interviewer survey obtained a response rate of 23 percent, compared to just 9 percent for the automated poll. According to van Lohuizen, the respondents to the automated survey were older, and as documented in the paper, 15 percentage points more likely than the live interviewer respondents to describe their political views as conservative (80 percent vs. 65 percent).
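For readers who want a rough sense of whether a 15-point gap could be sampling noise, the sketch below runs a standard two-proportion z-test on the figures reported here. It is not the analysis used in the paper. It assumes both percentages are based on the full samples (300 automated, 400 live) and that each sample behaves like a simple random sample, which low response rates make doubtful. The function name `two_proportion_z` is ours.

```python
import math

def two_proportion_z(p1, n1, p2, n2):
    """z statistic for the difference between two independent sample proportions."""
    # Pooled proportion under the null hypothesis that both groups share one rate.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    # Standard error of the difference under that null hypothesis.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se

# Reported figures: 80 percent conservative among 300 automated respondents,
# 65 percent among 400 live-interviewer respondents.
# ASSUMPTION: both percentages are computed over the full samples.
z = two_proportion_z(0.80, 300, 0.65, 400)
print(f"z = {z:.2f}")
```

Under those assumptions, the statistic lands well above the conventional 1.96 threshold, so the gap is unlikely to be sampling error alone. The test says nothing about why the two groups differ.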

This study has some obvious limitations. First, as van Lohuizen and Samohyl point out, they surveyed only Republican primary voters. Their theory is that respondents to automated polls tend to be more opinionated. Thus, as they explain, "a parallel experiment conducted with Democratic primary voters might find that participants in robo-polls are more liberal than participants in live operator surveys."

Second, the two experimental surveys were not weighted to bring them into demographic alignment. Most pre-election surveys do weight by variables like age, gender and race to correct for skews produced by non-responses. It is an open question whether, in this case, weighting up the younger respondents on the automated survey would have reduced the percentage of conservatives. Still, the larger point remains: A smaller, more conservative group of Republicans chose to participate in the automated survey.
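To make the weighting question concrete, here is a minimal sketch of how re-weighting by age can move a topline number. Every figure in it is hypothetical: the age split, the target shares and the conservative rates within each age group are invented for illustration and do not come from the article or the paper.

```python
def topline(shares, rates):
    """Overall percentage given each group's share of the sample and its rate."""
    return sum(share * rate for share, rate in zip(shares, rates))

# HYPOTHETICAL inputs, for illustration only (not data from the study).
groups = ["under 65", "65 and older"]
sample_shares = [0.20, 0.80]        # who actually answered the automated poll
target_shares = [0.40, 0.60]        # assumed makeup of the likely electorate
conservative_rates = [0.70, 0.825]  # share calling themselves conservative

unweighted = topline(sample_shares, conservative_rates)
weighted = topline(target_shares, conservative_rates)

print(f"unweighted: {unweighted:.1%}")
print(f"weighted:   {weighted:.1%}")
```

In this made-up case, weighting up the younger group trims the conservative share only modestly, because the two age groups do not differ much in ideology. How large the effect would be in the Delaware data depends on within-group rates that the article does not report.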

Finally, automated pollsters sometimes argue that this sort of non-response "bias" actually makes their surveys more accurate, because the respondents who opt out of the survey by hanging up also tend to be non-voters. Some also argue that non-voters are more likely to answer screening questions honestly when no live interviewer is involved.

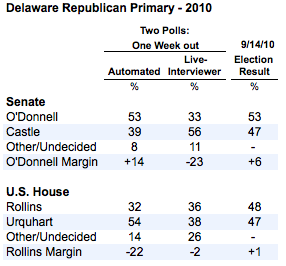

So which method produced a more accurate election forecast in this case? The robo survey had O'Donnell ahead by 14 points (53 percent to 39 percent), while the live interviewer survey had Castle leading by 23 (56 percent to 33 percent). Since O'Donnell upset Castle by six points (53 percent to 47 percent), the robo survey was closer, but keep in mind that both surveys were conducted a week before the primary, before O'Donnell was endorsed by Sarah Palin, Sen. Jim DeMint (R-S.C.) and the National Rifle Association. The true standing a week earlier was likely different from what transpired on Election Day.

Perhaps more useful is the question asked by both surveys on the race for U.S. House, which received nowhere near as much attention, both in Delaware and elsewhere. On that score, the live interviewer survey was much closer, showing Glen Urquhart leading Michelle Rollins by just two percentage points (38 percent to 36 percent). Urquhart, the more conservative of the two candidates, won by less than a single percentage point (48 percent to 47 percent with rounding). But the robo-survey conducted a week earlier showed Urquhart leading Rollins by 22 points (54 percent to 32 percent).

So both results are consistent with the finding that the automated survey overstated the number of self-described conservatives: It had O'Donnell ahead by roughly twice her final margin just before an avalanche of endorsements and media attention and showed the more conservative House candidate leading by a landslide when he ultimately won by just a single percentage point.
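The comparison can be laid out as simple arithmetic. The sketch below takes the percentages reported above, computes each poll's margin for the more conservative candidate and subtracts the final margin. The House result uses the rounded 48-47 figures, so its one-point margin slightly overstates the actual gap. The names `POLLS`, `RESULTS` and `margin_errors` are ours.

```python
# (more conservative candidate %, opponent %), as reported in the article.
# Senate: O'Donnell vs. Castle. House: Urquhart vs. Rollins.
RESULTS = {"Senate": (53, 47), "House": (48, 47)}  # House figures are rounded
POLLS = {
    "automated": {"Senate": (53, 39), "House": (54, 32)},
    "live": {"Senate": (33, 56), "House": (38, 36)},
}

def margin(pair):
    """Lead of the more conservative candidate, in percentage points."""
    return pair[0] - pair[1]

def margin_errors(polls, results):
    """Poll margin minus final margin; positive means the poll leaned conservative."""
    return {
        mode: {race: margin(pair) - margin(results[race]) for race, pair in races.items()}
        for mode, races in polls.items()
    }

errors = margin_errors(POLLS, RESULTS)
for mode, by_race in errors.items():
    for race, err in by_race.items():
        print(f"{mode:<10}{race:<8}{err:+d}")
```

A positive number means the poll showed the more conservative candidate doing better than the final result. Both automated figures are positive. Because the polls were taken a week before the vote, these gaps mix survey error with real movement in the race.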

Van Lohuizen and Samohyl also used a regression analysis to assess the results of 624 national polls measuring President Obama's job approval ratings between November 2008 and April 2010. They found a result consistent with our own observations -- namely, that automated and internet polls produce consistently higher disapproval percentages and a consistently lower undecided number.

This aspect of their analysis has two limitations. First, virtually all of the "robo" polls they examined (161 of 176, or about 91 percent) were conducted by one organization, Rasmussen Reports. Second, they overlook the possibility that the differences may also result from a slightly different job approval question (more on that here).

But the limits of trying to draw conclusions from public surveys underscore the importance of experimental studies like the one they conducted in Delaware. Given the sheer number of automated surveys released in election years, we need far more of this sort of research, and the sooner, the better.