This post was written in collaboration with Randy Gollub, Jeanette Mumford, Panthea Heydari, Leonardo Fernandino, and Cyril Pernet, and was approved by the entire Communications Committee and the Council of the Organization for Human Brain Mapping.

Scientific publications generate a written conversation that evolves over time. Each new publication expands on the conversational foundation established by its predecessors. In our view, a recent study by Eklund and colleagues makes a valuable contribution to the conversation in our field of brain mapping. Even so, many reports have interpreted the findings from this paper as casting doubt on all studies conducted with functional magnetic resonance imaging (fMRI) over the last two decades. We hope to dispel some of the misconceptions.

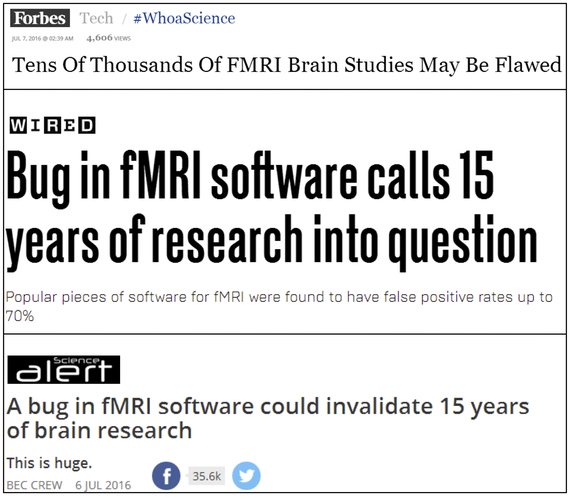

You may have read some of these reports, which often repeated convincing figures like "false positive rates up to 70 percent" or "Tens of Thousands of FMRI Brain Studies May Be Flawed" to create doubt and undermine confidence in fMRI. Where did these numbers come from? A major issue that all brain imagers must deal with is determining the reliability of the detected brain activation. Since the detection is made via statistical techniques, there is always a chance that what is determined to be an activation may in fact be a "false positive". Researchers use methods that cap the chance of a false positive in their measurements at a predetermined level (usually 5 percent). Controlling false positives, however, can be a tricky business since it often requires certain assumptions to be made. For those of you interested in a more detailed technical explanation, see the blog we wrote for our community. Assessing the risk of false positives is not a new issue and is central in most scientific fields. Since the field of fMRI research is relatively new - barely above the legal drinking age in the U.S. - we are still determining the best way to control false positives. The work by Eklund and colleagues showed that the false positive rate using some analysis techniques can be as high as 70 percent, which is the origin of that figure in the headlines. The "tens of thousands" refers to roughly 40,000, an estimate of the total number of studies that have used fMRI, or the size of the pool of studies that this work could possibly impact.

Three example headlines reporting that the findings by Eklund and colleagues may invalidate all fMRI studies. Image sources: Forbes, Wired, and Science Alert.

Now that we know what those figures represent, what do they mean and how do they affect the field of neuroimaging? The elegant study by Eklund and colleagues showed that only a certain subset of analysis decisions gives rise to the highest false positive rate of up to 70 percent (instead of the conventional 5 percent). However, not every brain mapper analyzes their data in the same way or uses the approaches examined by the authors. As pointed out by Dr. Nichols, one of the co-authors, on his blog, only ~3500 studies (or less than 10 percent of the ~40,000 existing fMRI studies) used the statistical methods that Eklund and colleagues called into question. So, over 90 percent of previously published fMRI studies implemented other analysis methods that were not questioned. Further, not all of the findings from these 3500 studies are necessarily false. Likely only a fraction of the 3500 studies - for example, those with marginally significant results or those not subsequently reproduced - might be called into question.
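For readers who want to check the proportions quoted above, here is a minimal sketch of the arithmetic. It uses only the two approximate figures from this post (~3500 and ~40,000); the variable and function names are ours and purely illustrative.

```python
# Approximate figures quoted in this post; both are estimates, not exact counts.
total_fmri_studies = 40_000  # estimated number of published fMRI studies
affected_studies = 3_500     # estimated studies using the questioned methods


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


affected_pct = share(affected_studies, total_fmri_studies)
unaffected_pct = 100.0 - affected_pct

print(f"Used the questioned methods: {affected_pct:.2f}%")
print(f"Used other methods:          {unaffected_pct:.2f}%")
```

Under these estimates, the studies that used the questioned methods make up a little under 9 percent of the total, which is where "less than 10 percent" and "over 90 percent" come from.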

So, while some sensationalists seem to think differently, there is no need to question every result ever published with fMRI. The new paper by Eklund and colleagues simply demonstrates that particular variants of statistical inference methods that are sometimes used are not valid. It does not invalidate either (a) the method of fMRI or (b) the majority of the field's findings. Finding meaningful patterns in fMRI data is an ongoing effort. As a reflection of the importance of this process, the journal in which the Eklund study was published has agreed to publish an erratum clarifying the interpretation of these data - consistent with what is highlighted in the present post. What we are witnessing is the healthy process of science correcting itself. Over time, scientists will learn to identify and fix flawed techniques, and eventually converge upon those that prove to be the most reliable.

By chance, as one of us was editing this article, a group of junior colleagues from a cognitive neuroscience lab was discussing the paper by Eklund and colleagues in the next room. They were considering whether and how these findings have implications for their own data analysis methods. And that is the true value of this work: to openly question and continually improve our methods because, after all, fMRI remains one of the best tools available to reveal the inner workings of the living human brain.

Kevin S. Weiner is a neuroscientist and a member of the Organization for Human Brain Mapping (OHBM), and writes for the Communications/Media Team. The OHBM Media Team brings cutting-edge information and research on the human brain to your laptops, desktops and mobile devices in a way that is neurobiologically pleasing. For more information about brain mapping, follow www.humanbrainmapping.org/blog or @OHBMSci_News

Further reading:

A technical comment on the Eklund et al. paper from our OHBM blog.

A technical comment on the Eklund et al. paper by Guillaume Flandin and Karl Friston.

A white paper on best practices in analyzing neuroimaging data.