Brace yourself, Pennsylvanians. The new cut scores for last year's Big Standardized Tests have been set, and they are not pretty.

It was only this week that the State Board of Education met to accept the recommendations of its Council of Basic Education. Because, yes -- cut scores are set after test results are in, not before. You'll see why shortly.

A source at those meetings passed along some explanation of how all this works. We'll get to the bad news in a minute, but first -- here's how we get there.

How Are Scores Set?

In PA, when it comes to ranking students, we stick with good, old-fashioned Below Basic, Basic, Proficient and Advanced. The cut scores -- the scores that decide where we draw the line between those designations -- come from two groups.

First, we have the Bookmark Participants. The Bookmark Participants are educators who look at the actual test questions and consider the Performance Level Descriptors, a set of guidelines that basically say "A proficient kid can do the following things." These "have been in place since 1999," which doesn't really tell us whether they've ever been revised. According to the state's presentation:

By using their content expertise in instruction, curriculum, and the standards, educators made recommendations about items that distinguished between performance levels (eg Basic/Proficient) using the Performance Level Descriptors. When educators came to an item with which students had difficulty, they would place a bookmark on that question.

In other words, this group set dividing lines between levels of proficiency in a way that kind of makes sense -- Advanced students can do X, Y, and Z, while Basic students can at least do X. (It's interesting to note that, as with a classroom test, this approach doesn't really get you a cut score until you fiddle with the proportion of items on the test. If I have a test that's all items about X, every student gets a 95 percent, but if I have a test that's all Z, only the Advanced kids so much as pass. Makes you wonder who decides how much of what to put on the Big Standardized Test, and how they decide it.)

Oh, and where do the committee members come from? My friend clarifies:

The cut score panelists were a group that answered an announcement on the Data Recognition website, who were then selected by PDE staff.

DRC (Data Recognition Corporation) is the company that runs testing in PA, so the folks who will judge its work are recruited through its own website. A couple of outside experts are added as well. One of those outside "experts" was from the National Center for the Improvement of Educational Assessment, one more group that thanks the Gates Foundation for support.

This means that everyone in the room for this process is a person who, in case Something Bad shows up, is predisposed to believe that the problem couldn't possibly be the test.

But Wait -- That's Not All

But if we set cut scores based on difficulty of various items on the Big Standardized Test, why can't we set cut scores before the test is even given? Why do we wait until after the tests have been administered and scored?

One reason might be that setting the curve after the results are in makes it possible to guarantee failure. Every student in PA could score better than 95 percent, and the Board could still declare, "Those kids who got a measly 95 percent are Below Basic. They suck, their teachers suck, and their school needs to be shut down."

But we should also note that the Bookmark Group is not the end of the line. Their recommendations go on to the Review Committee, and according to the state's explanation:

The Review Committee discussed consequences and potential implications associated with the recommendations, such as student and teacher goals, accountability, educator effectiveness, policy impact and development, and resource allocation.

In other words, the Bookmark folks ask, "What's the difference between a Proficient student and a Basic student?" The Review Committee asks, "What will the political and budget fallout be if we set the cut scores here?"

It is the Review Committee that has the last word:

Through this lens, the Review Committee recommended the most appropriate set of cut scores -- using the Bookmark Participants recommendation -- for the system of grade 3-8 assessments in English Language Arts and Math.

So if you've had the sense that cut scores on the BS Test are not entirely about actual student achievement, you are correct. Well, in Pennsylvania you're correct. Perhaps we're the only state factoring politics and policy concerns into our test results. Perhaps I need to stand up a minute to let the pigs fly out of my butt.

Now, the PowerPoint presentation from the meeting said that the Review Committee did not mess with the ELA recommendations of the Bookmark folks at all. They admit to a few "minor" adjustments to the math, most having to do with cut scores on the lower end.

You Said Something About Ugly

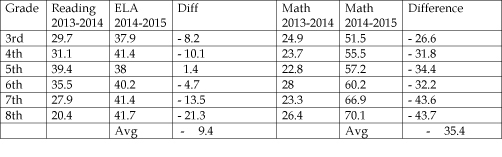

So, yes. How do the cut scores actually look? The charts from the PowerPoint do not copy well at all, and they provide no context. But my friend in Harrisburg created his own chart showing how the proposed cut scores stack up against last year's results. It shows the percentage of students who fall into the Basic and Below Basic categories.

This raises all sorts of questions. Is this year's test measuring something completely different from last year's test? Did all of Pennsylvania's teachers suddenly decide to suck last year? Is Pennsylvania in the grip of astonishing innumeracy? And most importantly, what the hell happened to the students?

Because, remember, we can read this chart a couple of ways, and one way is to follow the students -- so last year only 22.8 percent of the fifth graders "failed." But this year those exact same students, just one year older, have a 60.2 percent failure rate??!! Thirty-seven percent of those students turned into mathematical boneheads in just one year??!! Forty-seven percent of eighth graders forgot everything they learned as seventh graders??!! Seventy percent failure??!! Really????!!!! My astonishment can barely press down enough punctuation keys.

Said my Harrisburg friend, "There was some pushback from Board members, but all voting members eventually fell in line. It was clear they were ramming this through."

Farce doesn't seem too strong a word.

At a minimum, this will require an explanation of how the math abilities of Pennsylvania students or Pennsylvania teachers could fall off such a stunningly abrupt cliff.

And that's before we even get to the question of the validity of the raw data itself. Of course, none of us are supposed to discuss the BS Test ever, as we've signed an Oath of Secrecy. But we've all peeked, and I can tell you that I remember chunks of the 11th-grade test the same way I remember stumbling across a rotting carcass in the woods, or vivid details of how I handled my divorce -- unpleasant, painful, awful things tend to burn themselves into your brain. Point being, this whole exercise starts with tests that aren't very good to begin with.

If I'm teaching a class and suddenly my failure rate doubles or almost triples, I am going to be looking for things that are messed up -- and it won't be the students.

The theme of this week's meetings should have been "Holy smokes!! Something is really goobered up with our process because these results couldn't possibly be right."

State Board Chair Larry Wittig; Deputy Secretary of Education Matthew Stem; and Council of Basic Education Chair James Barker (a former State Board Chair and former Erie City Superintendent) apparently are the conductors on this railroad. So when it turns out that your teacher evaluation or student scores just dove straight into the toilet because of these shenanigans, be sure to call them. And here's the list of Board members, if you have some thoughts to share with them, too.

Only one of two things can possibly be true -- either all our previous years' worth of student scores in PA were a snare and a delusion, full of sound and fury and measuring nothing, or this year's test results are a crock. Okay, one other possibility -- the people running the testing show don't know what the heck they're talking about. Pennsylvania parents, taxpayers, and teachers deserve to know which is the case.

A version of this post originally appeared at Curmudgucation.